Translating responsible AI principles into practice with our new AI Governance Portal

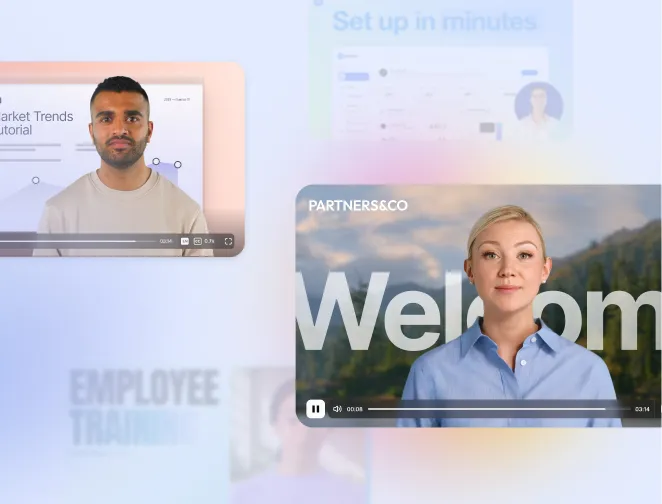


At Synthesia, we have always believed that building AI responsibly means embedding governance from the ground up. Before clear regulatory frameworks or technical standards emerged, we were already considering how fairness, safety, and accountability should shape the way we develop and deploy our technology.

Today, we’re introducing our most comprehensive approach to AI governance, guided by our 3Cs framework: consent, control, and collaboration. These principles shape how we design safeguards, structure our policies, and engage with our customers and partners. With the launch of the AI Governance Portal, we are making the 3Cs framework easier to explore and evaluate.

Why we built this portal

As AI adoption accelerates, customers are looking for more than innovation. They are looking for assurance.

Enterprise buyers need clarity around how their data will be handled, how content is moderated, and how a vendor manages risk. Our customers’ legal, privacy, and compliance teams are increasingly part of the buying process, and they want transparency backed by substance.

The AI Governance Portal is designed for these stakeholders. It offers a single, structured place to evaluate how Synthesia approaches governance across the full lifecycle of our platform. It simplifies due diligence, builds trust with decision-makers, and reflects our commitment to transparency.

What the portal covers

The portal is organized into three core categories that reflect our broader approach to responsible AI:

Trust and Assurance

This category covers our legal, privacy, and security foundations. It includes our commitments to data protection; our adherence to existing regulations and leading standards such as UK Cyber Essentials, SOC 2 Type II, and ISO/IEC 42001; and the policies and frameworks that guide how we handle data and manage compliance across jurisdictions.

Content and Delivery

Here, we focus on the safeguards built into the platform itself. From how content is moderated and approved to how users and avatars are managed within workspaces, this category shows how Synthesia helps ensure safe, consistent, and brand-aligned content creation at scale.

Community and Enablement

Responsible AI is not a solo effort. This section highlights how we support our customers and contributors through live customer sessions, the Synthesia Academy, our Talent Experience Program, and our AI Futures Council, as well as our collaborations with partners such as the Partnership on AI, Responsible Innovation Labs, and the Content Authenticity Initiative.

From principles to practice

Our AI Governance Portal reflects how we translate our values into action. It helps customers and stakeholders gain confidence in how Synthesia is built, governed, and used, reinforcing trust in the platform as they adopt AI in their organizations.

AI governance isn't a one-time task. It's an ongoing commitment to develop technology thoughtfully, with openness and diligence at every stage. The AI Governance Portal is designed to show that commitment in practice.

About the author

Sasa Markota

Head of Product & Privacy, Legal

Sasa Markota is the head of product and privacy in the legal team at Synthesia. He leads initiatives that align product innovation with data protection standards, ensuring our AI-driven video solutions comply with global regulations such as the GDPR and the forthcoming EU AI Act. With over seven years of legal experience supporting IT enterprises, Sasa specializes in translating complex legal frameworks into actionable strategies that enable responsible AI development.