Welcome to Synthesia AI Research

Synthesia has been the world's leader in synthetic media since 2017. We solve fundamental AI problems and build awesome products around them.

About our AI research

About our AI avatars

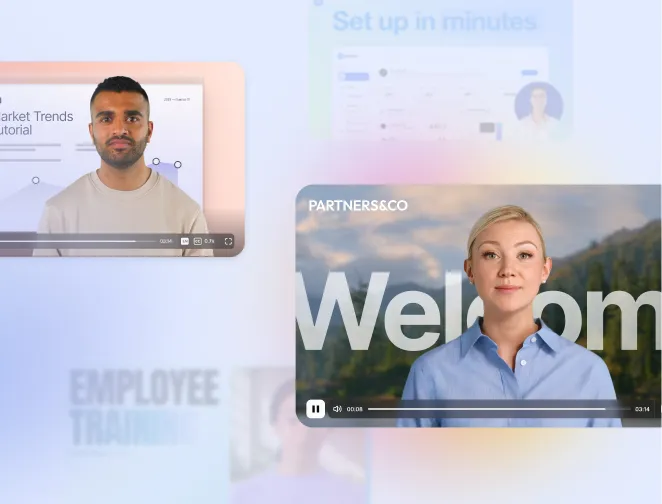

Our mission is to make video creation easy. We generate lifelike synthetic humans and create a performance directly from a script, a process we call text-to-video (TTV).

What makes this hard? We need to match the quality you see in conventional video. The challenge is that we are all accustomed to the subtle nuances of how a person should look, act and sound in a video: everything that makes a person look real. Any approximation ruins the illusion, and you hit the "uncanny valley."

At Synthesia, we solve this through neural video synthesis. We train neural networks to reproduce the photorealistic look and movements you see in existing videos. We have traversed the uncanny valley and we create synthetic humans that look real.

Our open source research

HumanRF research paper

HumanRF enables temporally stable, novel-view synthesis of humans in motion. It reconstructs long sequences with state-of-the-art quality and high compression rates by adaptively partitioning the temporal domain into 4D-decomposed feature grids.

ActorsHQ dataset

ActorsHQ is our high-fidelity, publicly available dataset of clothed humans in motion. The dataset features multi-view recordings from 160 synchronized cameras, each simultaneously capturing an individual 12MP video stream. As such, the dataset is tailored to the tasks of photorealistic novel-view and novel-pose synthesis of humans.

Our research challenges

Our goal is high-fidelity, photorealistic and controllable neural video synthesis. We are replacing physical cameras with generative synthesis. We conduct foundational research with our co-founders Prof. Matthias Niessner (TUM) and Prof. Lourdes Agapito (UCL) to develop 3D neural rendering techniques that synthesize realistic video.

Here are some of the problems we tackle today.

Scenes

We create learned 2D and 3D neural representations of shape, appearance and motion for controllable video synthesis of people.

Efficiency

We create efficient representations that can be quickly trained and deployed as a fast runtime for video synthesis.

Generalization

We aim to provide complete freedom to create new content while minimizing the time required to record training data.

Speech

We generate facial expressions that match the verbal cues of speech, producing a physically plausible representation in any language.

Dynamics

We generate nonverbal cues and body language to create an emotional, expressive, and engaging performance in the video.

Synthesia AI Research leadership

Matthias Niessner

I'm a co-founder of Synthesia and a Professor at the Technical University of Munich where I lead the Visual Computing Lab.

Lourdes Agapito

I'm a co-founder of Synthesia and a Professor of 3D Vision in the Department of Computer Science at University College London (UCL).

Our ethics standards

People first. Always.

As a pioneer in synthetic media, we are aware of the responsibility we have. It is clear to us that artificial intelligence cannot be built with ethics as an afterthought. Ethics needs to be front and center, an integral part of the company, reflected in company policy, in the technology we are building, and in our commitment to security. This is also why we are an active member of the Content Authenticity Initiative, which was started by Adobe in 2019.

Ethical content moderation

All AI video content goes through our content moderation process before being released to our clients. Read more about our content moderation framework and see our content moderation course.

AI consent priority

We will never re-enact someone without their explicit consent. This includes politicians or celebrities for satirical purposes. As an example, our NGO campaign with Malaria Must Die and David Beckham won the CogX Outstanding Achievement in Social Good Use Of AI Award in 2019.

Guiding industry standards

We actively work with media organisations, governments and research institutions to develop best practices and educate on video synthesis technologies.

Want to join our AI Research team?

We're constantly on the lookout for outstanding AI researchers. See our current openings below.

Want to see who we are and how we work?

Try Synthesia with a free video.

Simply type in text and get a free video with an AI avatar in a few clicks. No signup or credit card required.