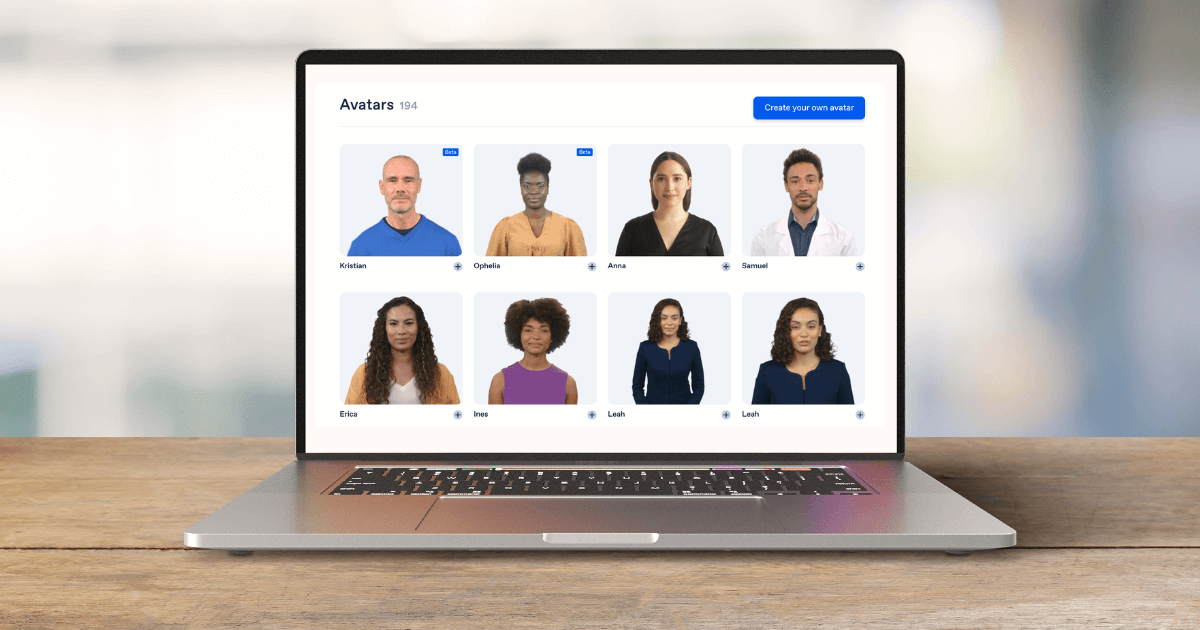

To dub, or not to dub — that is the question.

It’s a debate that sparks strong reactions, especially among cinephiles who worry about artistic intent.

But this article isn’t about art. It’s about learning, and what makes learning actually stick.

Why cognitive load determines whether training sticks

At the center of effective learning is a simple constraint: people have limited mental capacity at any given moment.

Cognitive science refers to this limit as cognitive load — the mental effort required to process information while learning.

Research in instructional design consistently emphasizes minimizing competing demands on attention, such as asking learners to read on-screen text, listen to audio, and track visuals at the same time.

And in today’s attention economy, we have to hold that attention if we hope to deliver impactful learning.

For instructional designers, this challenge is especially consequential in video, where learners must process language while coordinating explanations, visuals, and actions as they unfold over time.

If attention is the constraint, localization is the lever.

So let’s talk about how to pull that lever.

Instructional designers make countless localization decisions, but few matter as much as how language is delivered in learning videos — whether learners read subtitles or hear dubbed narration.

How subtitling shapes attention

Subtitles are often the default localization choice. I’ve certainly made that choice myself — for example, when rolling out a global onboarding experience for new hires.

Subtitles work well in learning contexts where learners revisit content, scan for information, or use the video as a reference.

Take a welcome video from a CEO that introduces the company’s history and values. Whether it’s subtitled in the same language or translated into dozens of others, adding subtitles here doesn’t meaningfully increase cognitive load.

Why?

Because new hires aren’t being asked to do anything. The information may be new, but the goal is orientation, not execution.

Unless your new hires are about to compete in a company history trivia contest, there’s no time-sensitive moment where recall determines success. Learners can revisit the video as often as they need.

The shift happens when learning moves from context to action.

Now imagine those same new hires learning a standard operating procedure (SOP). The training video shows a process unfolding step by step, with visuals, screen captures, or diagrams. There are knowledge checks and branching scenarios that ask the new hires to interact with the video.

If you add subtitles on top of that, learners are suddenly asked to read language while tracking visuals and coordinating actions as they happen.

Attention splits.

Whether that helps or hinders learning depends on what the learner needs to do next.

How dubbing reshapes attention

Sometimes, it simply makes more sense to dub.

I once facilitated a manager training that introduced Stephen B. Karpman’s Drama Triangle (a framework for understanding conflict dynamics and fostering accountability on teams). As part of that training, I asked participants to watch a short explainer video on the model before role-playing scenarios that mirrored these dynamics.

The video uses whiteboard animation, and there’s a lot happening at once. The narrator explains the model while illustrations are drawn in real time. Labels appear. Relationships take shape visually. Meaning is constructed through the coordination of explanation and image.

Adding subtitles on top of that would do more than add language. It would introduce additional visual complexity and pull attention away from the drawing as it emerges.

Learners would be asked to read, watch, and interpret at the same time — exactly the kind of split attention that increases cognitive load.

In this case, dubbing is the better option because it keeps language off the screen while the concept is being constructed visually. Learners can follow the animation as it unfolds without dividing their attention between reading and watching.

Choosing between subtitles and dubbing

⚠️ Dubbing frees visual attention only when narration is well timed, clearly delivered, and paced to match the visuals. Poor synchronization quickly negates the benefit.

What makes localized video training stick

Training fails because attention is finite — and too often, we spend our time as learning and development professionals managing the medium instead of understanding the work.

Subtitles and dubbing aren’t interchangeable localization options. They’re learning design decisions that determine where attention goes at the moments performance depends on it.

When those decisions align with how learners need to process information — what they need to watch, when they need to listen, and how ideas unfold over time — learning has a chance to stick.

For teams focused on upskilling and behavior change, this isn’t about choosing the “right” format. It’s about designing learning experiences that respect cognitive limits and direct attention intentionally. Because if learners can’t focus on what matters, no amount of translation will create impact.

If attention is the constraint, localization is the lever.

The question is whether we’re pulling it with learning in mind.

About the author

Learning and Development Evangelist

Amy Vidor

Amy Vidor, PhD is a Learning & Development Evangelist at Synthesia, where she researches emerging learning trends and helps organizations apply AI to learning at scale. With 15 years of experience across the public and private sectors, she has advised high-growth technology companies, government agencies, and higher education institutions on modernizing how people build skills and capability. Her work focuses on translating complex expertise into practical, scalable learning and examining how AI is reshaping development, performance, and the future of work.

Is dubbing always better than subtitles for training?

No. Learning science supports choosing the modality that best fits the task. Dubbing often helps when learners need to track actions and timing in real time. Subtitles can work well for reference content, scanning, and review.

When do subtitles work well in enterprise learning?

Subtitles work well when learners are revisiting content, scanning for specific information, or using training as a reference library. They also support accessibility and language acquisition in some contexts.

When is dubbing a better fit for learning videos?

Dubbing tends to be a better fit for procedural training, system walkthroughs, and safety scenarios where learners need to keep their eyes on the action while processing language through audio.

How does accessibility fit into the subtitles vs. dubbing conversation?

Accessibility is a requirement, not a trade-off. Captions and transcripts should be provided to support inclusive learning. The learning design question is how to coordinate accessibility features with audio and visuals so they support learning without overloading attention during critical moments.

Is this a localization decision or a learning design decision?

Both. Subtitles and dubbing are often treated as localization workflow decisions. In learning contexts, they’re also design decisions that shape attention and cognitive load, which influences whether training leads to consistent performance.

How should teams think about subtitles and dubbing when using AI?

AI makes it easier to scale translation and voice. Learning outcomes still depend on design quality: timing, segmentation, synchronization, and clarity. AI expands execution capacity; learning science guides how to use it well.