Create AI videos with 240+ avatars in 160+ languages.

People connect with faces, not text.

Digital humans bring expression, gaze, and nuance to AI-generated content, bridging the gap between automation and authentic human communication.

What are digital humans?

Digital humans are human-like virtual beings that represent people or brands in digital environments. When you see "digital humans" in this article, we're referring to AI-generated presenters and conversational agents that look and sound human.

Think of them as a sophisticated evolution of animated avatars. While avatars can be cartoonish or abstract, digital humans are photorealistic—they're designed to be indistinguishable from real people. This realism isn't just about appearance; it's about creating genuine connections through communication.

Digital humans operate through three integrated layers:

- Visual persona: Face and body rendering, lip-sync, gaze tracking, and natural gestures

- Voice: Neural text-to-speech or consented voice cloning for authentic speech

- Intelligence: Scripted logic or LLM-driven dialogue for context-aware responses

They can appear in different deployment modes depending on your needs:

- Pre-rendered videos: Highest fidelity and brand control, ideal for training modules or marketing content

- Real-time "live" AI avatars: Two-way interaction for customer support

- Embedded agents: Guided experiences inside apps and websites

What do digital humans do?

If you're battling long production cycles, low engagement in training, or inconsistent messaging across markets, digital humans give you a consistent face and voice you can update in a few hours.

They recreate human interaction at scale, addressing the fundamental need for personal connection in digital communication.

Non-verbal cues like gaze, facial micro-expressions, and prosody are critical to how we build rapport and understand intent. Digital humans leverage these elements to create more engaging experiences. A visible face and voice can increase attention and recall in procedural content, which is one reason you're seeing them used for complex explainers and training materials.

Beyond training, digital humans excel at providing personalized experiences across various touchpoints.

They can guide customers through troubleshooting processes, deliver leadership messages with consistent tone and presence, or present product demonstrations in multiple languages—all while maintaining the human element that text-based systems lack.

Where are digital humans used?

Digital humans are rapidly entering everyday business operations, with the digital human economy projected to exceed $125 billion by 2035. Here's where they're making the biggest impact:

- Customer support: A "face on the FAQ" guiding troubleshooting and hand-offs, reducing ticket volume for common issues

- Training & L&D: Compliance refreshers, product how-tos, role-play scenarios that are easy to update and localize

- Healthcare assistants: Patient education and navigation with human oversight and regulatory safeguards

- Marketing & sales: Product tours, announcements, and regionalized explainers that ship in multiple languages

- Internal communications: Consistent delivery of leadership messages and change-management updates

Examples of digital humans

The examples below are some of my favorite applications of digital human avatars.

If you see one you like, you can click 'Edit video template', sign up for Synthesia (for free), and use the template to create your own digital human AI video.

1) Training video

For training and educational videos, a digital human works well because it mirrors the classroom dynamic. Learners are used to receiving instruction from a person, and a visible instructor can help structure the lesson, highlight key points, and make complex topics feel more approachable.

2) Interactive quiz video

In quizzes and interactive videos, a digital human helps guide the learner through the experience, explain questions, and provide feedback in a way that feels more like a conversation than a test. Seeing a presenter introduces a sense of presence and pacing, which can make learners more comfortable and focused as they move through each step.

3) Product demo video

In product demos and tours, a digital human can act as a guide who walks the viewer through features and workflows. This human layer helps frame what they are seeing on screen, provides context for why something matters, and creates a smoother narrative than screen recordings alone.

4) Sales outreach video

In sales and outreach videos, a digital human makes the message feel more personal than text or slides. It allows the presenter to address the viewer directly, introduce an offer or idea, and communicate tone and intent clearly, which is especially important in first-touch or follow-up communication.

5) Business presentation video

For corporate updates and business presentations, a digital human adds clarity and structure. A presenter can introduce topics, transition between sections, and emphasize key messages, helping the audience follow along as they would in a live meeting or all-hands presentation.

6) Knowledge base video

In knowledge base videos, a digital human can provide quick orientation and explanation. A visible narrator helps set context, explain processes, and make otherwise static information feel more guided and easier to absorb.

7) Recruitment video

In recruitment and HR content, a digital human is useful for introducing the company, explaining roles or processes, and setting expectations. Seeing a person speak can help candidates and employees better understand who they are hearing from and what the organization is like, making the information feel more relatable and grounded.

8) Social media video

For social media videos, a digital human helps capture attention quickly and build a personal connection in short-form content. A presenter can deliver messages directly to the camera, making announcements, tips, or thought leadership feel more authentic and engaging in fast-scrolling feeds.

9) Educational video

In educational videos, a digital human supports explanation and continuity across lessons. Seeing the same instructor helps learners follow complex topics, maintain focus, and build familiarity throughout a course or learning program.

10) SOP video

For standard operating procedure videos, a digital human can introduce each step, explain why it matters, and guide viewers through the workflow. This human narration reduces ambiguity and makes procedural content easier to follow and remember.

11) Marketing video

In marketing videos, a digital human helps humanize the brand and clearly communicate value propositions. A presenter can frame benefits, tell short stories, and build trust in a way that feels more persuasive than slides or text alone.

12) Onboarding video

In onboarding videos, a digital human creates a welcoming first impression and sets expectations for new employees or customers. A visible host can guide viewers through what to expect and what to do next, making the experience feel more personal and reassuring.

13) Microlearning video

In microlearning videos, a digital human delivers short, focused explanations that feel direct and easy to digest. A presenter can introduce a single concept, provide quick context, and reinforce key takeaways in a highly efficient format.

14) Company culture video

In company culture videos, a digital human helps convey values, mission, and ways of working through a recognizably human presence. Seeing a spokesperson or leader speak makes cultural messages feel more authentic and emotionally engaging.

How do you make a digital human?

Creating a digital human is more straightforward than you might think. Here's the streamlined workflow:

- Choose your avatar type: Personal avatar (your likeness), studio avatar (professionally captured), or a "stock" avatar (based on actors)

- Record a few minutes of on-camera footage (in a studio, or on your webcam or phone) and give your consent

- The system trains lip-sync, gaze, and micro-gestures; you select a voice (AI voices or multilingual voice cloning)

- Generate videos from text; use dialogue for multi-speaker scenes; adapt to new markets with 1-click translation

What are the technologies used in developing digital humans?

Creating convincing digital humans requires a sophisticated blend of technologies that address both appearance and communication:

- Motion capture to serve as a basis for 3D modeling

- 3D modeling to create realistic face and body representations

- Natural language processing to understand voice commands and context

- Natural language generation to form appropriate responses

- Artificial intelligence to process input and learn from patterns

Two key distinctions shape how digital humans are deployed:

- Pre-rendered vs real-time: Use pre-rendered when fidelity, brand approvals, and localization matter; use real-time when latency and two-way conversation are core

- Scripted vs LLM-driven: Scripted for accuracy and compliance; LLM-driven when you need retrieval, Q&A, and tool use—always with guardrails
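The two rules of thumb above can be written down as a small decision helper. This is a minimal Python sketch, not a Synthesia feature: the `UseCase` flags and both function names are hypothetical, and the tie-break (compliance-critical content stays scripted even when open-ended Q&A would help) is our own assumption about a conflict the rules leave open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """Hypothetical flags describing a planned deployment (not a Synthesia API)."""
    two_way_conversation: bool  # must the avatar reply to the viewer live?
    latency_critical: bool      # would waiting for a render break the experience?
    compliance_critical: bool   # must every sentence be approved before release?
    open_ended_qa: bool         # must it answer unscripted questions, retrieve docs, or use tools?


def rendering_mode(uc: UseCase) -> str:
    """Real-time only when live conversation or low latency is core to the use case."""
    if uc.two_way_conversation or uc.latency_critical:
        return "real-time"
    # Otherwise pre-rendered keeps fidelity, brand approvals, and localization in reach.
    return "pre-rendered"


def dialogue_mode(uc: UseCase) -> str:
    """Scripted by default; LLM-driven only for open-ended Q&A outside compliance scope."""
    # Assumed tie-break: compliance-critical content stays scripted.
    if uc.compliance_critical or not uc.open_ended_qa:
        return "scripted"
    return "llm-driven (with guardrails)"


if __name__ == "__main__":
    support_agent = UseCase(two_way_conversation=True, latency_critical=True,
                            compliance_critical=False, open_ended_qa=True)
    compliance_refresher = UseCase(two_way_conversation=False, latency_critical=False,
                                   compliance_critical=True, open_ended_qa=False)
    print(rendering_mode(support_agent), "/", dialogue_mode(support_agent))
    print(rendering_mode(compliance_refresher), "/", dialogue_mode(compliance_refresher))
```

Run as a script, this prints `real-time / llm-driven (with guardrails)` for the live support agent and `pre-rendered / scripted` for the compliance refresher.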

The uncanny valley

The uncanny valley occurs when a synthetic human looks almost real but something feels slightly off, causing unease. It's best to tune blink and gaze rates, reduce extreme head motion, and align pauses to visual beats to avoid that "robotic" feel.

Why do we need digital humans?

Digital humans address real business challenges while augmenting teams rather than replacing them. Here's the value they deliver:

- Operate 24/7 without scheduling constraints—perfect for global teams and customers

- Consistent, on-brand delivery across regions and languages

- Faster updates when policies, pricing, or processes change

- Enhanced accessibility: captions, multilingual delivery, and culturally appropriate presenters

- Safe spaces for sensitive topics with clear disclosure

Be sure to keep humans in the loop for high-stakes topics and make it obvious when users are engaging with AI. This transparency builds trust while leveraging the efficiency benefits of digital human technology.

What are the risks of digital humans?

Like any transformative technology, digital humans come with considerations that require thoughtful implementation:

1. The uncanny valley

When a digital human looks almost, but not quite, real, viewers can feel uneasy and trust can erode.

Mitigation: User testing, style tuning, and preferring pre-rendered content for critical communications.

2. User privacy

Human-like interactions may lead to oversharing of personal information.

Mitigation: Data minimization, consent capture, and secure log storage.
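As an illustration of data minimization and consent capture, a conversational deployment can redact obvious personal identifiers before a transcript is stored, and store nothing at all without consent. The sketch below assumes transcripts arrive as plain strings; `minimize` and `log_turn` are hypothetical names, and the regular expressions are deliberately simple examples that will miss many formats and over-match some numbers, so treat them as a starting point rather than a vetted PII detector.

```python
import re

# Illustrative patterns only; a production system needs audited, locale-aware detectors.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(transcript: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    return PHONE.sub("[PHONE]", transcript)


def log_turn(store: list[str], transcript: str, consented: bool) -> None:
    """Append a redacted transcript to `store` only if the user consented to logging."""
    if consented:
        store.append(minimize(transcript))


if __name__ == "__main__":
    logs: list[str] = []
    log_turn(logs, "Reach me at jane.doe@example.com or +44 20 7946 0958.", consented=True)
    log_turn(logs, "Please call me back tomorrow.", consented=False)
    print(logs)  # ['Reach me at [EMAIL] or [PHONE].']
```

Redacting before storage, rather than at read time, means the raw identifiers never reach the log store in the first place.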

3. Ethics and bias

Stereotypes can surface in avatar selection and representation.

Mitigation: Diverse avatar library, local cultural review, and avoiding stereotypical portrayals.

4. Human interactions

Over-reliance on digital humans could impact genuine human connections.

Mitigation: Clear AI disclosure and easy routing to human support.

5. Identity and deepfakes

Potential for misuse in creating unauthorized representations.

Mitigation: Watermarking of AI videos, provenance logging, and contractual controls on likeness use.

Implementation playbook

Ready to get started with digital humans? Follow these five steps for successful implementation:

- Pick one high-value use case (e.g., onboarding module or FAQ explainer)

- Draft for speech (short lines, one idea per sentence, add emphasis cues)

- Choose avatar type and voice; run a cultural and brand check

- Pilot in one market; measure completion rate, dwell time, and CSAT

- Localize with 1-Click Translation; roll out variants; set governance rules
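For the pilot step, the three metrics can be computed from per-viewer session records. This is a minimal sketch assuming a hypothetical `ViewSession` export from your video player or LMS; the 90% "completed" threshold is an assumption, and CSAT is computed in its common form, the share of survey respondents answering 4 or 5 on a 1 to 5 scale.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class ViewSession:
    """Hypothetical per-viewer record exported from a video player or LMS."""
    seconds_watched: float
    video_length_s: float
    csat_score: Optional[int] = None  # 1-5 survey answer; None if the viewer skipped it


def pilot_metrics(sessions: list[ViewSession], completion_threshold: float = 0.9) -> dict[str, float]:
    """Completion rate, average dwell time (seconds), and CSAT for one pilot market."""
    if not sessions:
        raise ValueError("no sessions to measure")
    # A session counts as completed once the viewer reaches the assumed threshold (90% by default).
    completed = sum(1 for s in sessions
                    if s.seconds_watched >= completion_threshold * s.video_length_s)
    scores = [s.csat_score for s in sessions if s.csat_score is not None]
    satisfied = sum(1 for score in scores if score >= 4)
    return {
        "completion_rate": completed / len(sessions),
        "avg_dwell_s": mean(s.seconds_watched for s in sessions),
        # CSAT = share of respondents answering 4 or 5; NaN if nobody answered the survey.
        "csat": satisfied / len(scores) if scores else float("nan"),
    }


if __name__ == "__main__":
    pilot = [
        ViewSession(120.0, 120.0, 5),
        ViewSession(115.0, 120.0),
        ViewSession(60.0, 120.0, 3),
        ViewSession(30.0, 120.0, 4),
    ]
    print(pilot_metrics(pilot))
```

With this sample data, two of four viewers pass the 108-second mark, so the completion rate is 0.5; average dwell time is 81.25 seconds; and two of the three survey answers are 4 or 5, giving a CSAT of about 0.67.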

The future of human interaction

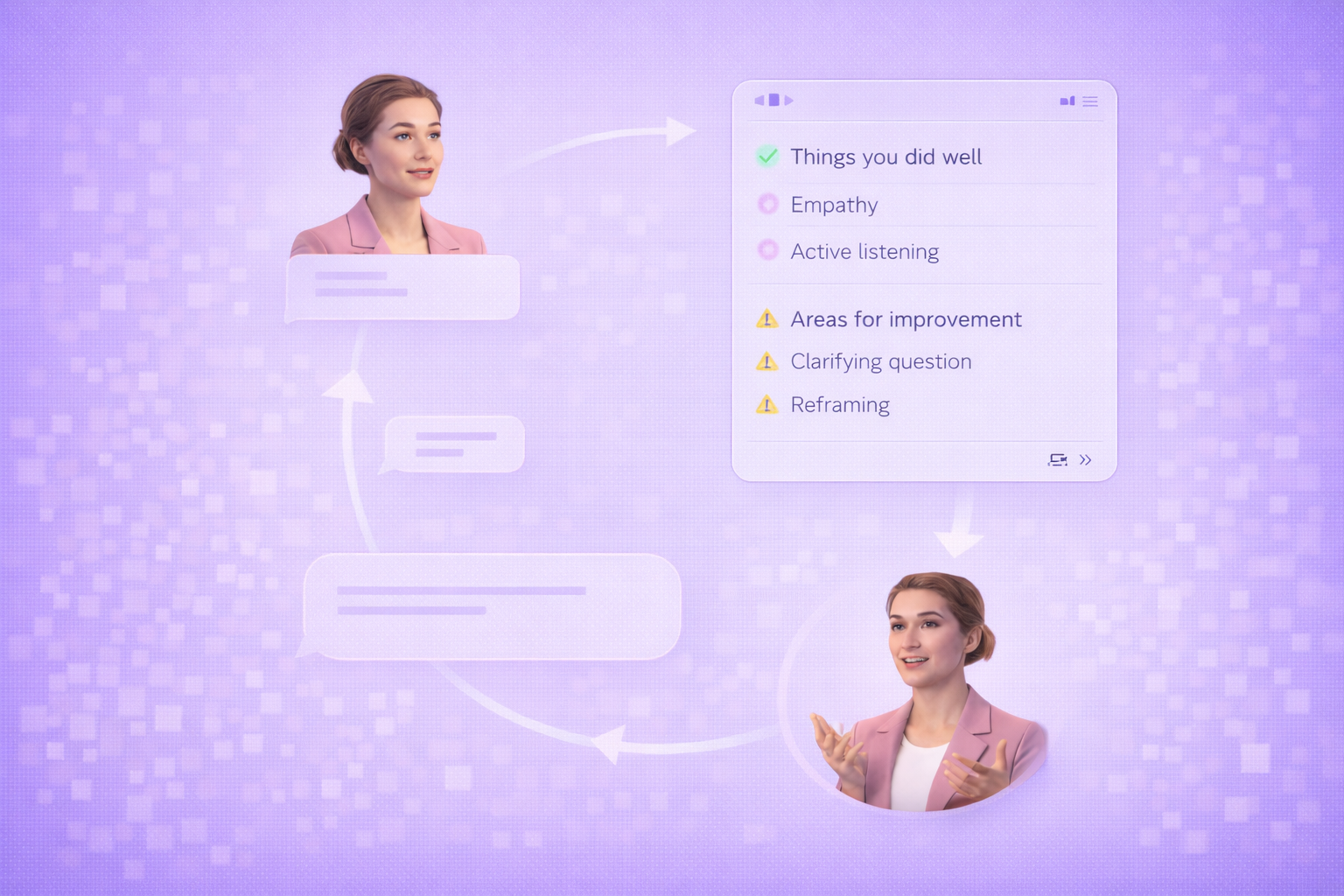

Digital humans are already humanizing virtual interactions and impacting how businesses operate. Expect agentic video—digital humans that can ask clarifying questions, query your knowledge base, and take actions. You'll see fewer "watch-only" videos and more "do-with-me" flows that guide users through complex processes.

Individuals are taking on new forms of digital identity. Businesses are accelerating their communications. Society is entering a new era of social interaction.

About the author

Video Editor

Kyle Odefey

Kyle Odefey is a London-based filmmaker and content producer with over seven years of professional production experience across film, TV and digital media. As a Video Editor at Synthesia, the world's leading AI video platform, his content has reached millions on TikTok, LinkedIn, and YouTube, even inspiring a Saturday Night Live sketch. Kyle has collaborated with high-profile figures including Sadiq Khan and Jamie Redknapp, and his work has been featured on CNBC, BBC, Forbes, and MIT Technology Review. With a strong background in both traditional filmmaking and AI-driven video, Kyle brings a unique perspective on how storytelling and emerging technology intersect to shape the future of content.

What is a digital human?

A digital human is a photorealistic virtual being that looks, sounds, and communicates like a real person through AI technology. Unlike basic avatars or chatbots, digital humans combine lifelike appearance with natural speech, facial expressions, and body language to create authentic connections in digital environments.

These AI-powered presenters can represent real people as digital twins or exist as fictional characters, serving as the face of your brand across training videos, customer support, and marketing content. By recreating human interaction at scale, digital humans bridge the gap between automated efficiency and the personal touch that builds trust and engagement in business communications.

What are practical examples of digital humans in business today?

Digital humans are transforming how businesses communicate across multiple touchpoints. In customer support, they serve as always-available representatives who guide users through troubleshooting processes with a friendly face, reducing ticket volume for common issues. For learning and development teams, digital humans deliver consistent compliance training and product demonstrations that can be updated instantly and localized for global audiences.

Marketing teams use digital humans to create personalized product tours and announcements that ship in multiple languages without reshooting. Healthcare organizations deploy them for patient education with appropriate oversight, while internal communications teams ensure leadership messages maintain consistent tone and presence across all regions. These applications demonstrate how digital humans make professional video content scalable and accessible for teams of any size.

How do I create a digital human with Synthesia?

Creating a digital human with Synthesia takes just minutes and requires no technical expertise. You can choose from over 230 professionally captured studio avatars, or create a personal avatar by recording 15 minutes of footage using your webcam or phone. After providing consent for your likeness, the AI processes your footage to create a digital twin that captures your appearance and can speak in your voice or any of the available AI voices.

Once your digital human is ready, simply type your script and the platform generates a video with natural speech, expressions, and gestures. You can create multi-speaker dialogues, add screen recordings, and adapt content for new markets with one-click translation. This streamlined process transforms weeks of traditional video production into hours, making it practical for teams to maintain fresh, localized content across all their communications.

Can digital humans speak multiple languages for global audiences?

Digital humans in Synthesia can communicate fluently in over 140 languages and accents, making them ideal for global business communications. The platform combines AI voice generation with precise lip-syncing and culturally appropriate gestures, ensuring your digital presenter appears native in each language rather than dubbed. You can create content once and translate it instantly, maintaining consistent messaging while adapting to local markets.

This multilingual capability extends beyond simple translation to include voice cloning, where your digital twin can speak languages you don't know while maintaining your unique voice characteristics. Teams use this to deliver training in employees' native languages, create region-specific marketing content, and ensure customer support feels personal regardless of location. The result is truly global communication that maintains the human connection essential for building trust across cultures.

How does Synthesia ensure consent and transparency when deploying digital humans?

Synthesia implements strict consent protocols to prevent unauthorized use of anyone's likeness or voice. Before creating a personal avatar, individuals must provide explicit consent through either in-person verification for studio captures or secure online processes for webcam recordings. The platform verifies not just that someone is human, but that they are the specific person whose likeness is being captured, preventing non-consensual deepfakes.

For deployment, Synthesia advocates clear AI disclosure so viewers know when they're watching AI-generated content. The platform includes watermarking capabilities and maintains provenance logging to track content creation and usage. These safeguards, combined with policy filters that prevent inappropriate content generation and human review requirements for sensitive topics, ensure digital humans enhance rather than deceive in business communications. This ethical framework builds trust while leveraging the efficiency benefits of AI video technology.