Create high-quality L&D content up to 90% faster.

It’s Monday morning. You’re scanning the status of half-finished work. Onboarding needs an update. A team training request is waiting on SME review. A compliance module is sitting with a vendor for localization.

Then your manager pings you to review resourcing. Bottom line: make learning impactful. Do it with less time, less budget, and less headcount.

That’s why teams are turning to AI to standardize and scale content production.

We’ll show you how to ship faster and iterate more often while keeping quality consistent, so you can deliver role-relevant learning in the flow of work at the scale the business expects.

📊 What the data says

We surveyed 421 L&D professionals in November 2025 for our AI in Learning & Development Report (2026).

Here's what we found:

- Speed is the #1 reason teams use AI: 84% say speeding up production is the biggest incentive.

- Time saved is the clearest value today: 88% report value via time saved on content creation.

- The next wave shifts from “faster” to “better”: Teams expect the biggest gains in personalization (72%), wider reach (65%), engagement (56%), business impact (55%), and localization (54%).

Where content production gets stuck

Most training is communication. It’s how organizations translate decisions into behavior: what changed, what matters, and what people need to do next.

The challenge is that the message keeps moving. Policies shift. Tools update. Processes evolve. Priorities change. In traditional content production systems, every change triggers rework, restarts review cycles, and generates new requests. You are not just maintaining content. You are constantly producing new content to keep pace with the business.

This problem compounds in global organizations. A single policy update can turn into multiple versions across regions and languages. Localization becomes part of the critical path, and delays in any step mean teams ship late everywhere.

Why learning in the flow of work raises the standard

When someone is mid-task, they’re not looking for a course. They’re looking for a clear answer they can use right now. That’s the standard behind learning in the flow of work.

Research on workflow learning points to the same idea: support is most effective when it shows up in the moment of need and helps people execute the task, not just understand the concept.

In practice, that changes what “good output” looks like. Flow-of-work learning is smaller, easier to search, and easier to update. For example:

- A 60–90 second “what to do next” clip for a common process

- A “common mistakes” troubleshooting explainer

- A policy update recap that highlights what changed and what to do now

- A guided walkthrough for a critical SOP or tool

When L&D can produce these assets quickly, content stays current, findable, and usable. That’s how speed turns into effectiveness: the right support reaches people at the moment work is happening.

Guiding principles for speeding up production

Teams that streamline content production build a workflow that moves from request to release with minimal friction. It’s easy to repeat, easy to review, and easy to update. The principles below are the core of that system.

- Standardize intake so requests arrive ready to build: Capture audience, outcome, source of truth, constraints, and publish surface in a consistent brief.

- Separate drafting from SME validation so reviews stay bounded: AI drafts quickly; SMEs validate accuracy and risk; L&D owns clarity, structure, and practice.

- Design for moment-of-need delivery from the start: Decide early where people will use it and design for small, searchable outputs.

- Build in chunks so change stays local: Structure content so updates replace one piece, not the whole asset.

- Template patterns so quality scales consistently: Reuse layouts, scene structures, and script formats so every new build starts from a proven pattern.
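To make the intake principle concrete, here is a minimal sketch of a brief that flags blank fields before a request enters the build queue. The class name, the helper, and the sample values are hypothetical illustrations; only the five field names come from the list above.

```python
from dataclasses import dataclass, fields


@dataclass
class IntakeBrief:
    """Hypothetical intake brief; fields mirror the intake principle above."""
    audience: str
    outcome: str
    source_of_truth: str
    constraints: str
    publish_surface: str


def missing_fields(brief: IntakeBrief) -> list[str]:
    """Return the names of fields left blank, in declaration order."""
    return [f.name for f in fields(brief) if not getattr(brief, f.name).strip()]


# Sample request (illustrative values only).
brief = IntakeBrief(
    audience="New support agents",
    outcome="Can process a refund without escalation",
    source_of_truth="",  # not yet supplied by the requester
    constraints="Under 90 seconds; English and Spanish",
    publish_surface="Knowledge base",
)

print(missing_fields(brief))  # ['source_of_truth']
```

A request that returns an empty list is ready to build; anything else goes back to the requester before drafting starts.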

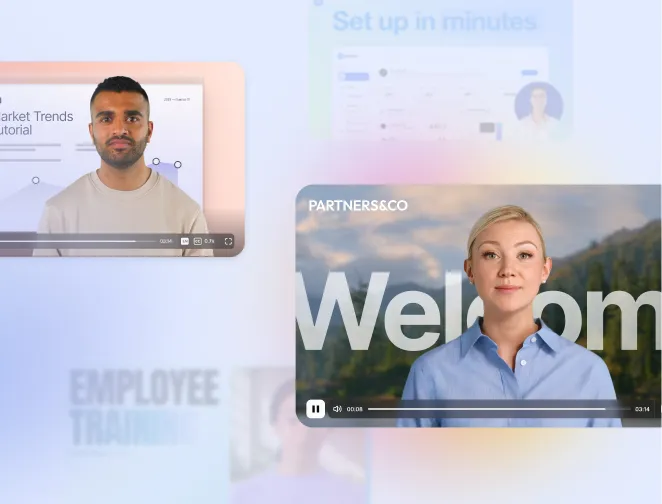

How to create a video in Synthesia

One practical way to apply these principles is to build in Synthesia.

In this workflow, AI produces the first draft, SMEs validate accuracy and risk, and L&D designs the structure and practice moments.

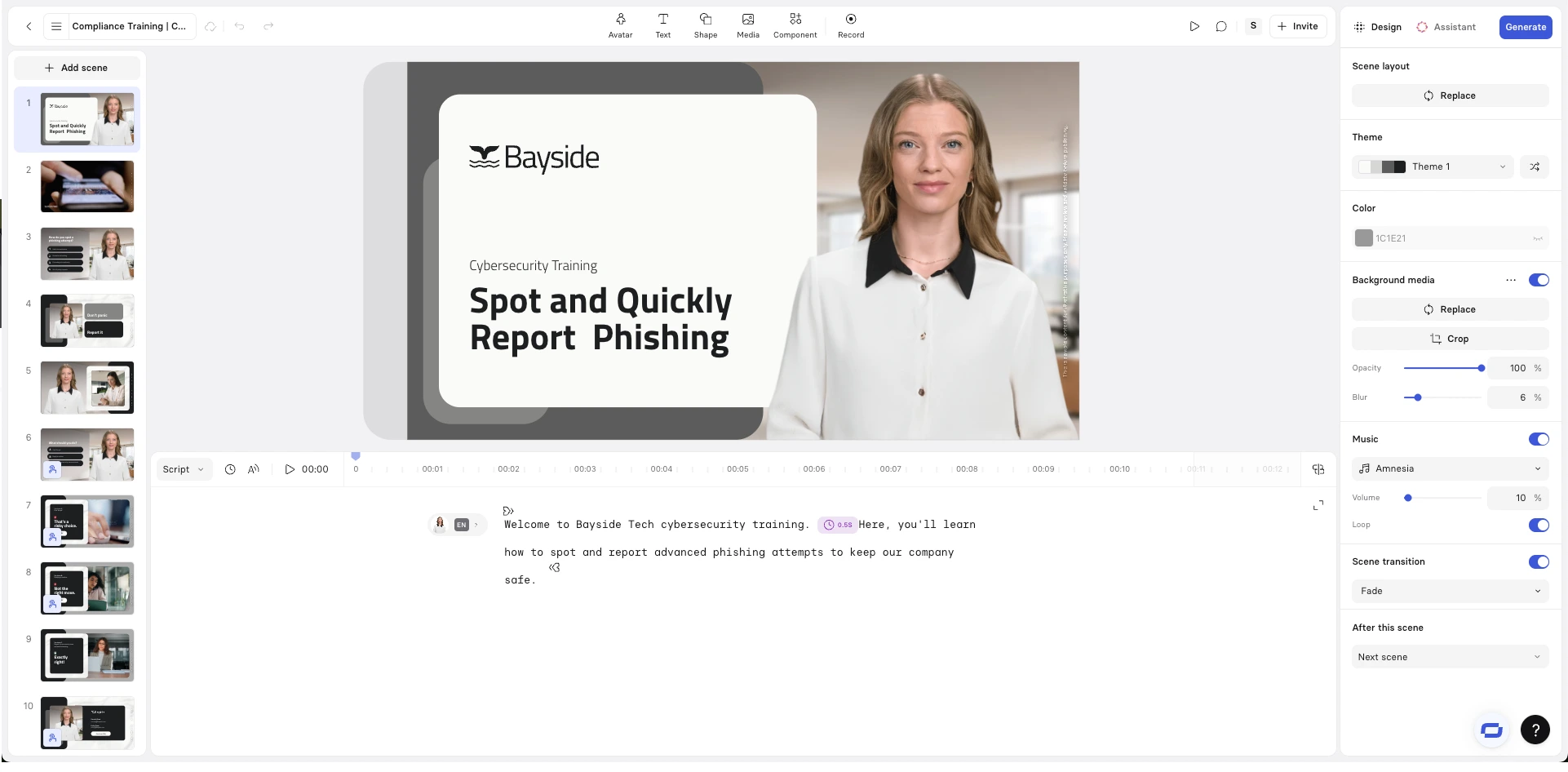

Step 1. Create a first draft

Start from a template like the one below, or head to our AI video generator to start from a text prompt, a PDF, a PPT, or a script. Or begin from scratch.

Step 2. Validate with SMEs (time-bound)

Send a draft pack (scene list + script) for SMEs to validate accuracy, required wording, and risk, so feedback stays focused and fast.

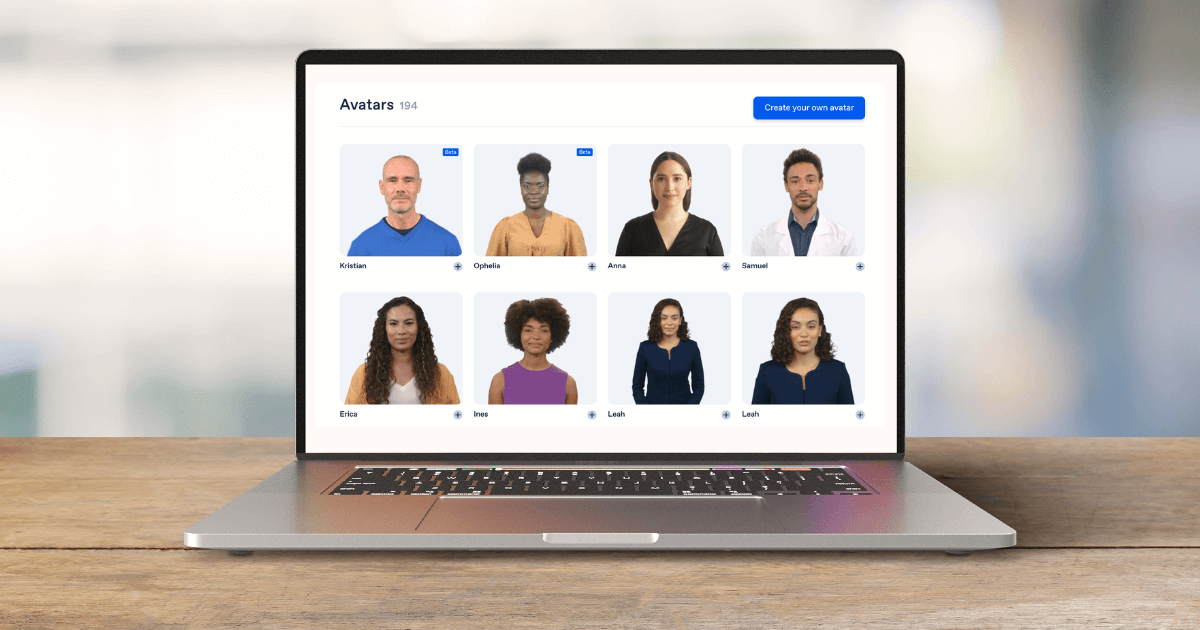

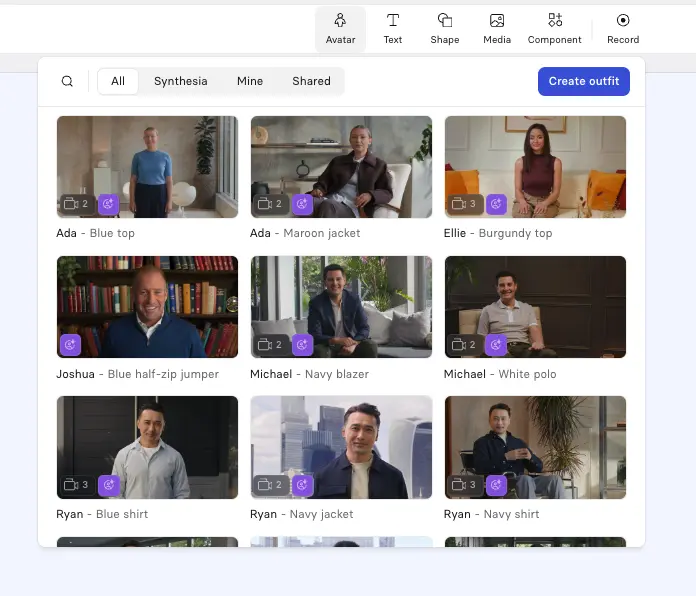

Step 3. Choose an avatar and voice

Select the AI avatar presenter style, language, and voice that fit your audience.

Step 4. Design your scenes

Build in short chunks using branded layouts and supporting visuals so updates stay small.

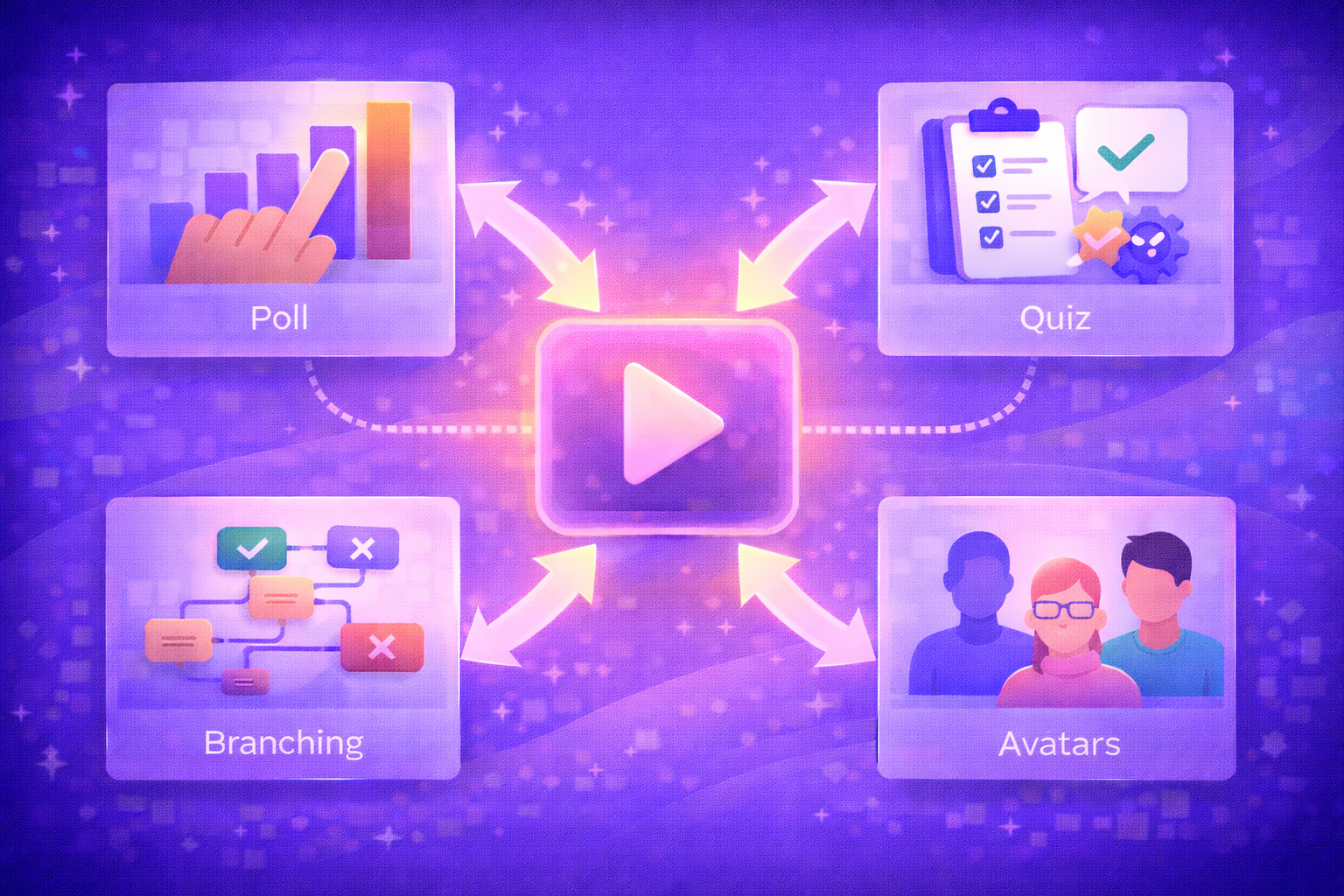

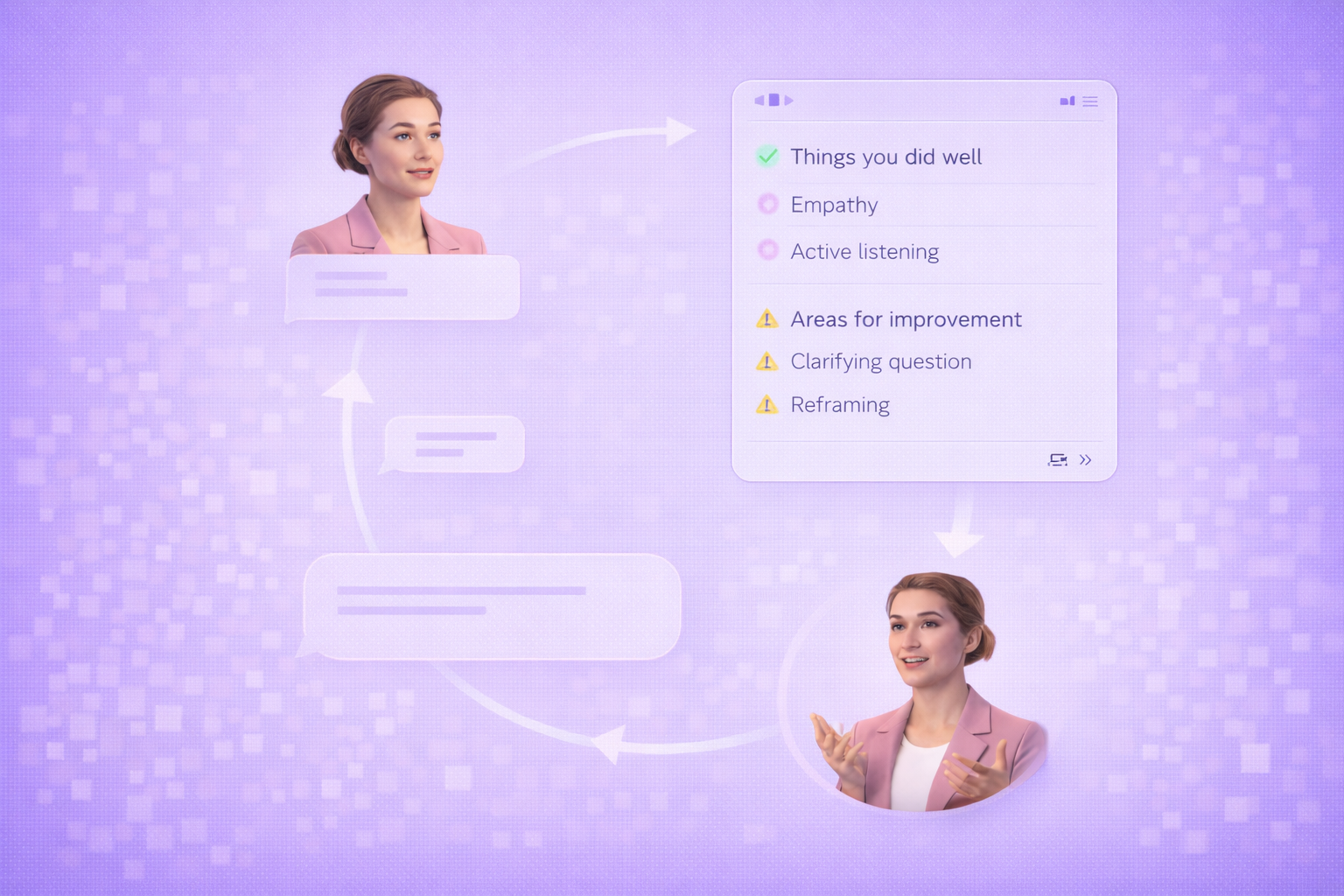

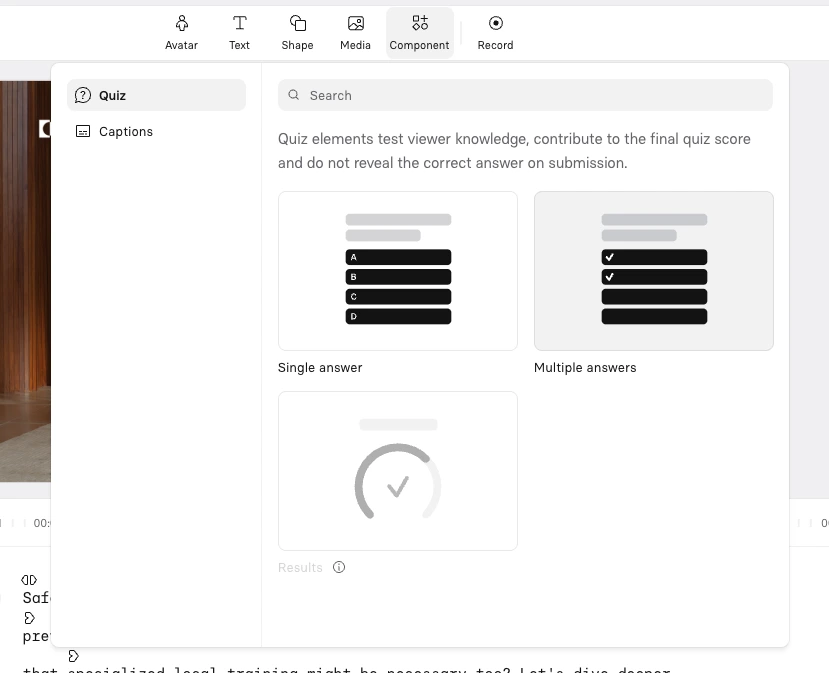

Step 5. Add interactivity

Use a quick check or scenario to turn viewing into practice.

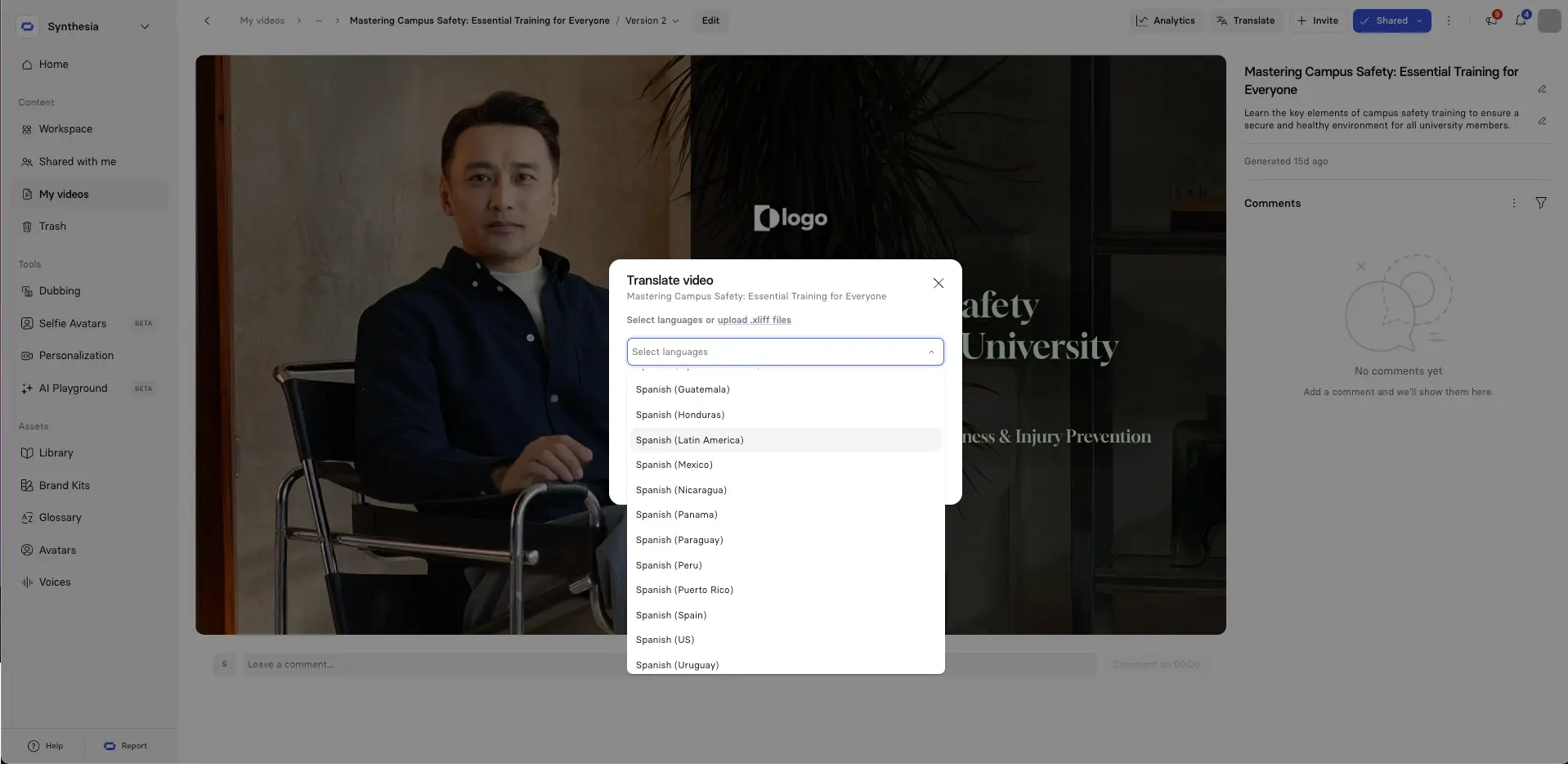

Step 6. Localize and scale

Generate subtitles and language versions without rebuilding the video.

Step 7. Preview and refine

Tighten pacing, clarity, and scene flow before publishing.

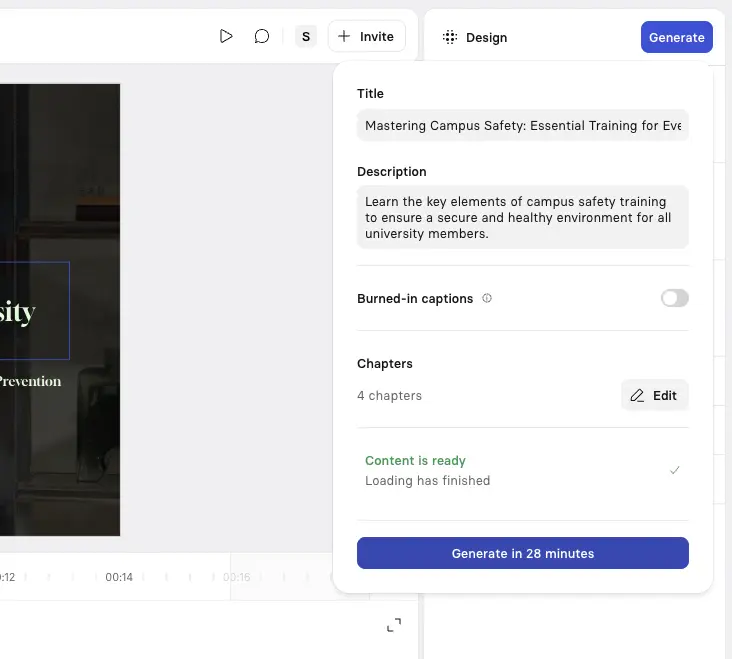

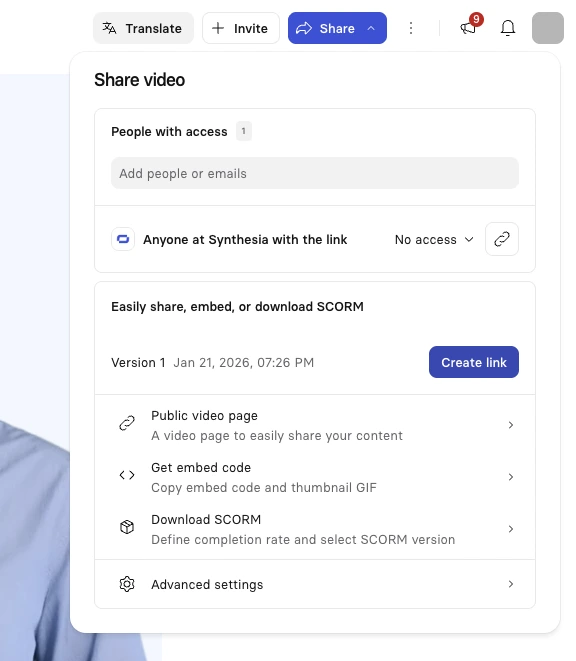

Step 8. Generate and publish

Publish where it will be used, then iterate as requirements change.

Update anytime to reflect new policy changes or learner feedback, and keep a clean version history while you do it.

That’s the quality-and-speed unlock: content stays current, variants are easy to manage across roles and regions, and support shows up where people actually work. That’s how L&D becomes more effective, not just more productive.

💡 Tip: Enterprise teams can partner with our Solutions team to build APIs that automate content creation at scale, like generating a personalized onboarding video for every new hire.

Publishing and measurement

Publish based on the job-to-be-done. Use the LMS when completion evidence is the requirement. Use flow-of-work channels (Slack or Teams, an intranet, a knowledge base) when the goal is in-the-moment performance support.

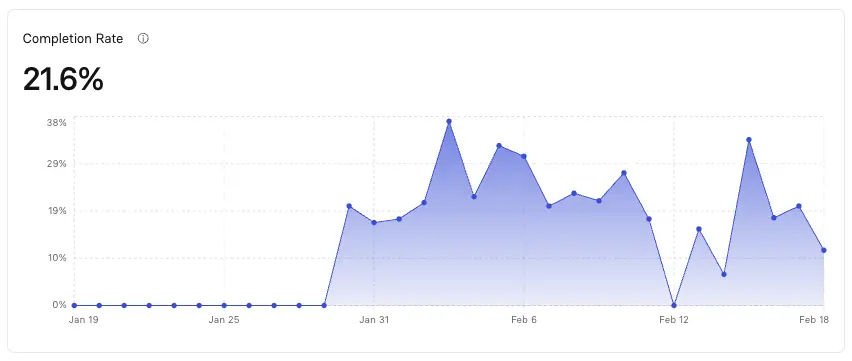

Measure three things so you can tell whether speed translated into impact:

- Adoption: views or completion

- Learning: scenario or quick-check accuracy

- Performance: one operational metric (error rate, ticket volume, rework, cycle time)

💡 Tip: Any rollout should include some measurement, even if it’s lightweight. Tie the performance metric to a real business or capability goal, not just completion rates.
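As a lightweight starting point, the three measures can be computed from a handful of counts. The sketch below is illustrative only: the field names, the sample numbers, and the choice of ticket volume as the operational metric are assumptions, not survey data or a product feature.

```python
from dataclasses import dataclass


@dataclass
class RolloutStats:
    """Hypothetical counts for one published asset."""
    assigned: int           # people the asset was meant for
    viewed: int             # people who viewed or completed it
    checks_attempted: int   # quick-check or scenario attempts
    checks_correct: int     # attempts answered correctly
    metric_before: float    # operational metric before release (here: weekly tickets)
    metric_after: float     # same metric after release


def ratio(part: float, whole: float) -> float:
    """Divide safely, returning 0.0 when the denominator is zero."""
    return part / whole if whole else 0.0


def summarize(s: RolloutStats) -> dict[str, float]:
    return {
        "adoption": ratio(s.viewed, s.assigned),
        "learning": ratio(s.checks_correct, s.checks_attempted),
        # Relative change; a negative value means the metric went down.
        "performance_change": ratio(s.metric_after - s.metric_before, s.metric_before),
    }


stats = RolloutStats(assigned=200, viewed=150, checks_attempted=120,
                     checks_correct=96, metric_before=40.0, metric_after=30.0)
summary = summarize(stats)
print(summary)  # {'adoption': 0.75, 'learning': 0.8, 'performance_change': -0.25}
```

A change in the operational metric alone does not prove the training caused it, so treat the number as a prompt for a closer look rather than a verdict.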

About the author

Amy Vidor

Learning and Development Evangelist

Amy Vidor, PhD is a Learning & Development Evangelist at Synthesia, where she researches emerging learning trends and helps organizations apply AI to learning at scale. With 15 years of experience across the public and private sectors, she has advised high-growth technology companies, government agencies, and higher education institutions on modernizing how people build skills and capability. Her work focuses on translating complex expertise into practical, scalable learning and examining how AI is reshaping development, performance, and the future of work.

How can L&D teams speed up content production?

Standardize the pipeline: intake → outline → script → build → review → publish → update. Use AI for first drafts and repetitive production work, then use checklists and templates to keep quality consistent across authors and teams.

What are the biggest bottlenecks in L&D content production?

Three things slow teams down most: unclear requests, SME review churn, and late changes to the “source of truth.” Fix these with a scoped intake form, one accountable owner for accuracy, and a defined approval lane (what SMEs must approve vs. what they can ignore).

Where does AI save the most time in L&D production?

AI saves time where work is repeatable: drafting outlines/scripts, creating quizzes/checks, turning text into scenes, voiceover, and translation/localization. It saves less time where judgment is required: choosing the right examples, sequencing practice, and validating accuracy.

How do you use AI without lowering training quality?

Use AI to draft, then enforce quality with a short QA checklist: one message per scene, no duplicated on-screen text, required steps are explicit, decisions include feedback, and every claim matches an approved source. Treat SMEs as validators of accuracy, not co-writers.

What’s the best workflow for SME reviews and approvals?

Make review structured and bounded. Ask SMEs to answer three questions only: Is it accurate? Is anything missing that would cause failure on the job? Is any wording required for legal or compliance reasons? Everything else (style, pacing, examples) stays with L&D.

How do you scale training updates when processes change?

Build in modules you can swap: short scenes + reusable templates + a version log (owner, last updated date, change trigger). Update the scenes that changed, not the whole course, and publish updates where the work happens.
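One way to picture the swap-one-scene approach is a course stored as independent scenes, each carrying its own version-log fields. This is a sketch under assumptions: the `Scene` class, the `update_scene` helper, and all sample values are hypothetical, and only the log fields (owner, last updated date, change trigger) come from the answer above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scene:
    """Hypothetical scene record with version-log fields."""
    script: str
    owner: str
    last_updated: date
    change_trigger: str


def update_scene(course: dict[str, Scene], scene_id: str, script: str,
                 trigger: str, today: date) -> None:
    """Replace one scene's script and record why and when it changed."""
    scene = course[scene_id]
    scene.script = script
    scene.last_updated = today
    scene.change_trigger = trigger


# Illustrative course with two scenes.
course = {
    "intro": Scene("Welcome to refunds.", "L&D", date(2025, 11, 3), "Initial release"),
    "refund-steps": Scene("Refund within 14 days.", "L&D", date(2025, 11, 3), "Initial release"),
}

# A policy change touches only the scene it affects.
update_scene(course, "refund-steps", "Refund within 30 days.",
             trigger="Refund policy update", today=date(2026, 1, 15))

print(course["refund-steps"].last_updated)  # 2026-01-15
print(course["intro"].last_updated)         # 2025-11-03
```

Because each scene carries its own log entry, a reviewer can see at a glance which parts changed and which stayed as approved.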

How do you localize training content faster?

Design for localization: short scenes, stable terminology, minimal idioms, and a single glossary. Translate once, then reuse across formats. Keep visuals and on-screen text easy to swap without re-editing the full asset.

What should L&D teams measure when they “ship faster”?

Measure three things: adoption (views/completions), learning (scenario or knowledge-check accuracy), and performance (one operational metric: error rate, ticket volume, rework, or cycle time). If the operational metric doesn’t move, faster production didn’t improve capability.