Create engaging training videos in 160+ languages.

At scale, training breaks down. Messages drift across teams and regions, updates take too long to roll out, and content quickly becomes outdated.

Training videos help bring clarity and consistency back into the system, especially when the same message needs to reach people across roles, locations, and time.

Until recently, creating training videos was resource-intensive. Studios, cameras, voiceovers, and long production cycles turned even small updates into major projects.

Synthesia removes those constraints. That’s why over 90% of the Fortune 500 trust Synthesia to create and maintain training at scale.

Option 1: Convert existing training materials into videos

If you already have training content, this is the fastest way to get started. Instead of beginning from a blank page, you can turn existing materials (PDFs, Word docs, PowerPoint decks, or URLs) into training videos in minutes.

This approach is called assisted creation, and it’s how most corporate training videos are made in Synthesia. It removes the friction of rewriting content for video and helps you reach a strong first draft quickly.

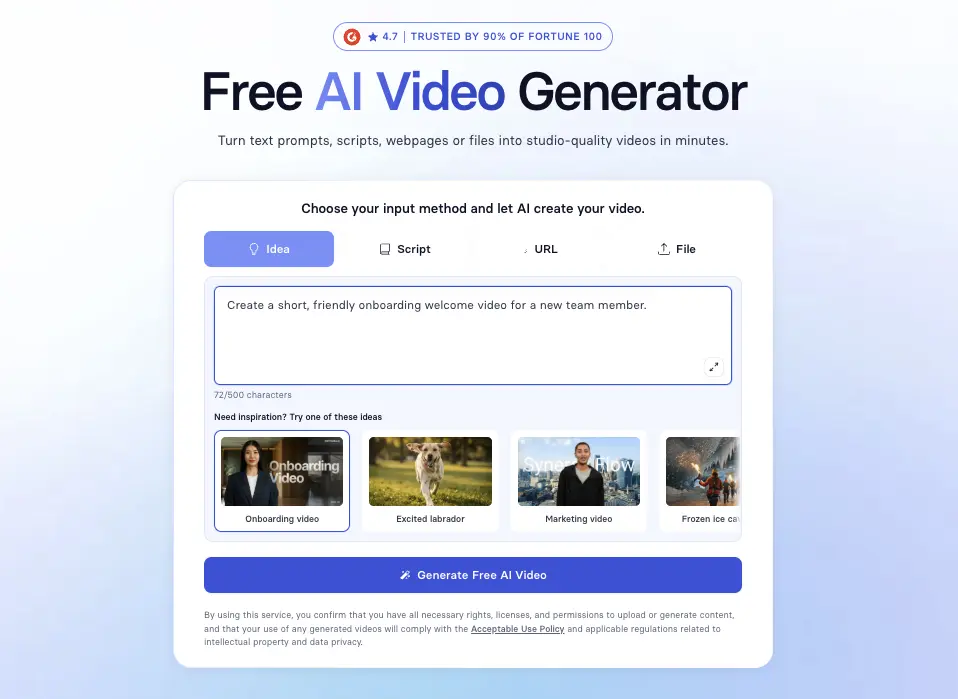

Step 1: Go to Synthesia's AI video generator

Head to Synthesia's AI video generator.

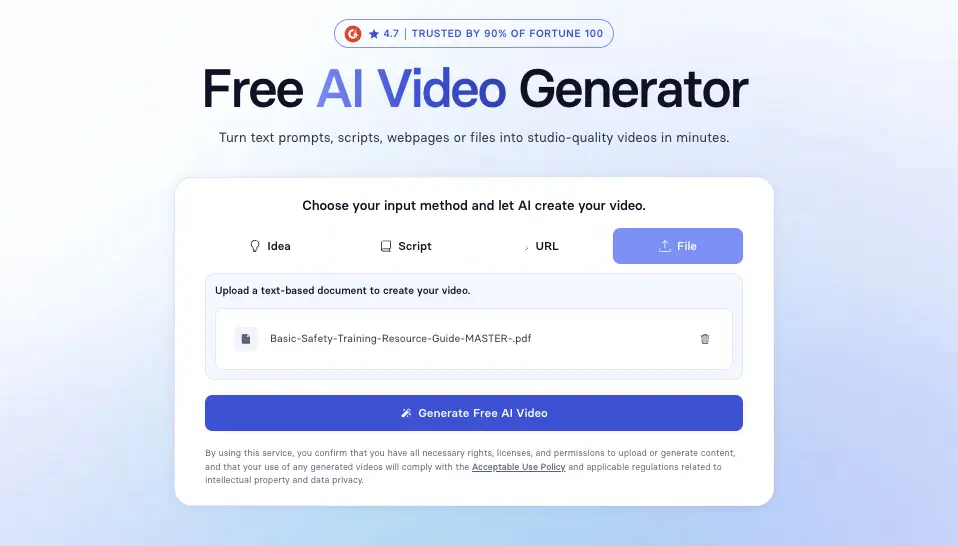

Step 2: Select the File tab and upload your training materials

Select the File tab and upload your training materials as PDFs, PowerPoint slides, Word documents, or text files.

You can also use the URL tab to import content from a webpage, paste a video script into the Script tab, or enter a simple prompt in the Idea tab to get started.

When you're ready, hit Generate.

Step 3: Sign up to Synthesia for free

Sign up for a free Synthesia account.

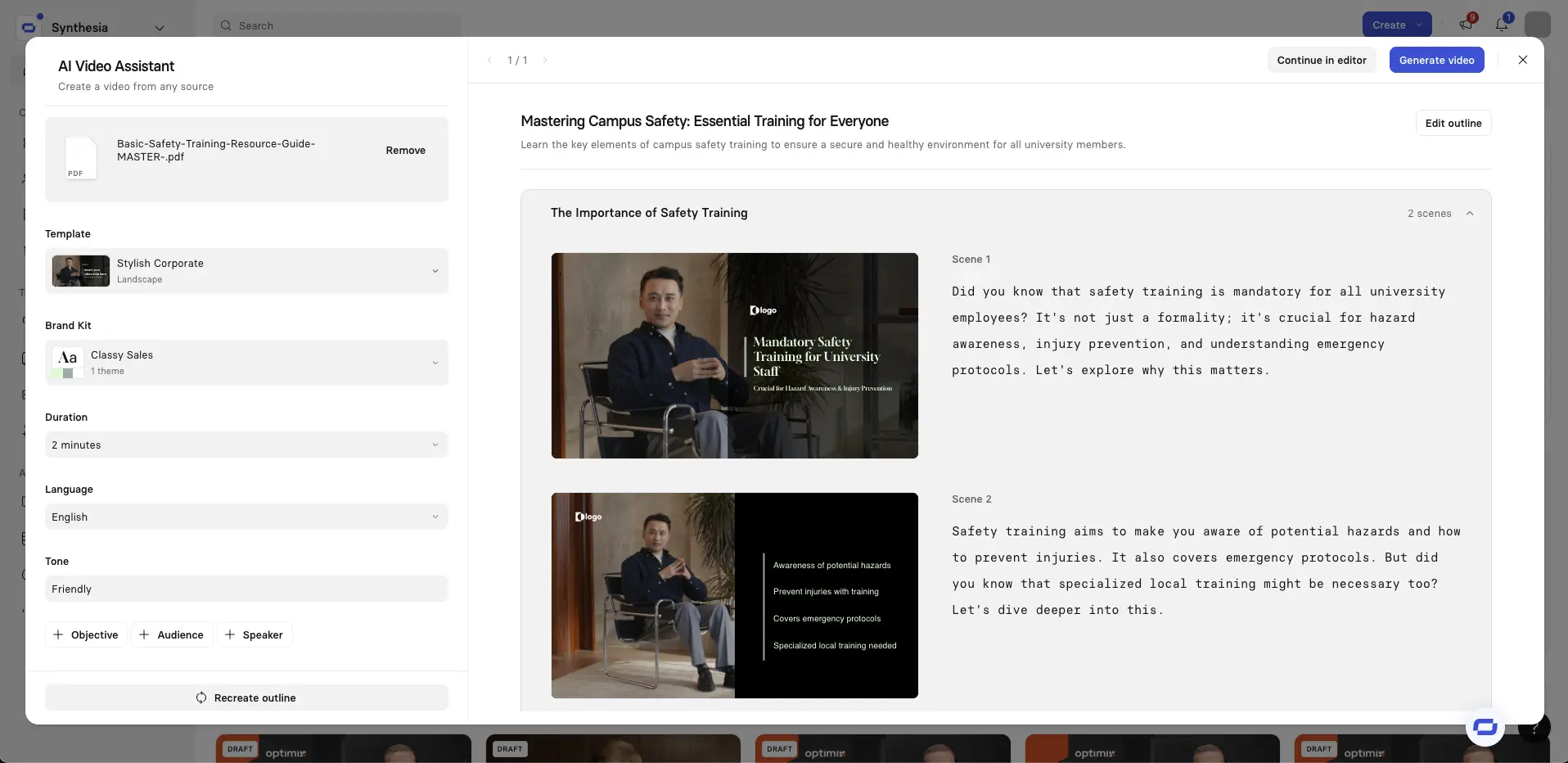

Step 4: Outline your training video

You’ll now see an overview of your video’s scenes along with a draft script for each one.

From here, you can change templates; adjust settings such as video duration, objective, and language; add, remove, or edit scenes; or recreate the outline entirely.

When you’re ready, click Continue in editor.

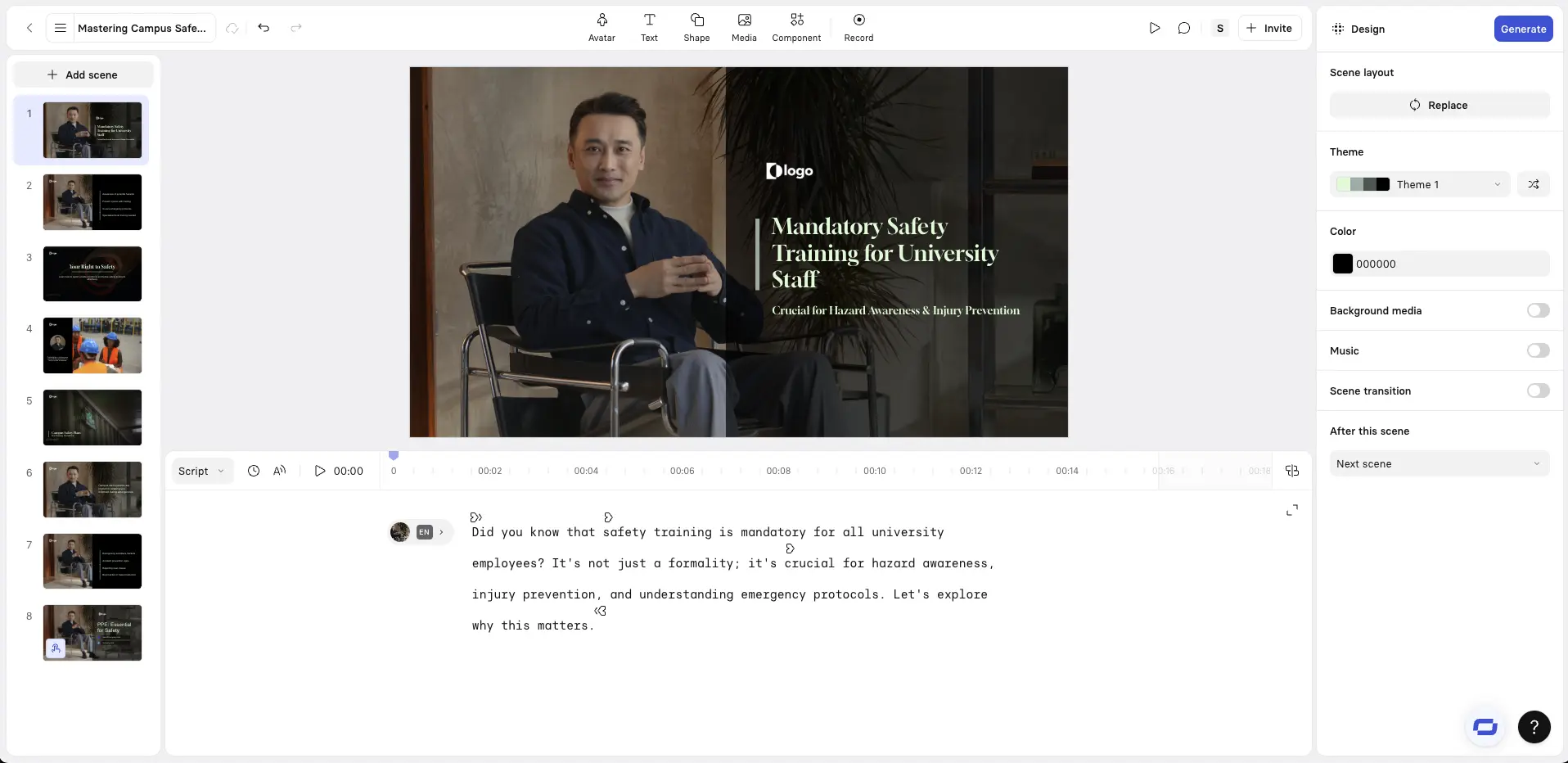

Step 5: Edit your training video

Now it's time to edit your training video. You can review your scenes, refine the script, and assemble all multimedia elements into a complete video.

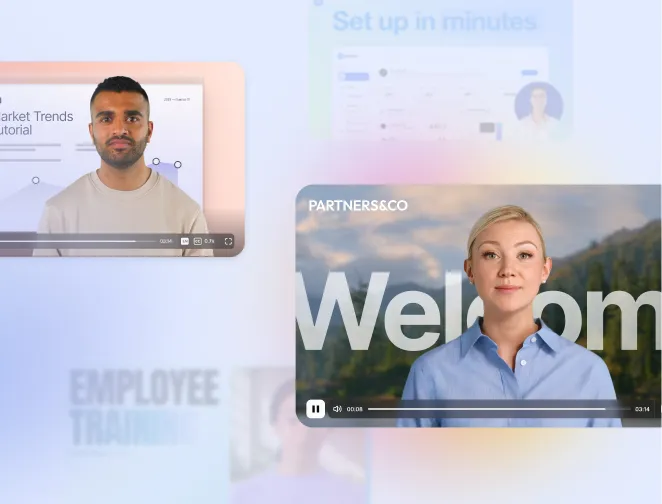

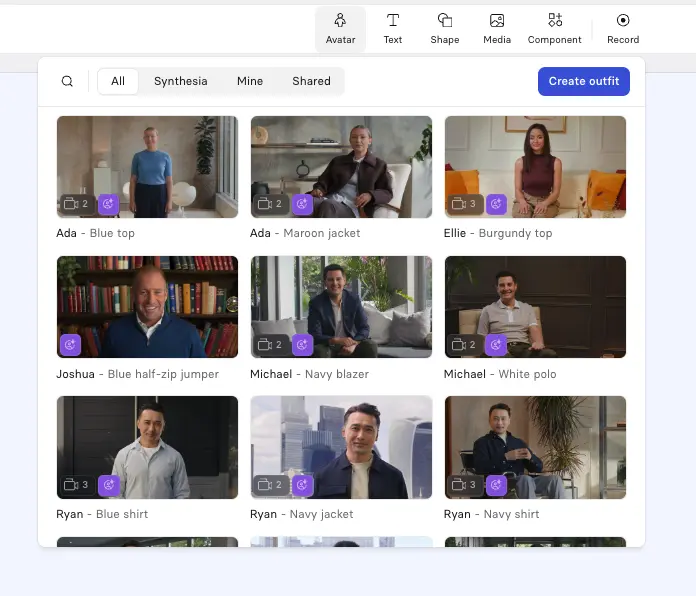

Choose an AI avatar and voice

You can select from a wide range of AI avatars, AI voices, languages, and accents to match your audience and context.

Add screen recordings

Use Synthesia’s AI screen recorder for software tutorials and walkthroughs. A common layout pairs a talking-head avatar with a screen recording, with the avatar on one side and the screen on the other.

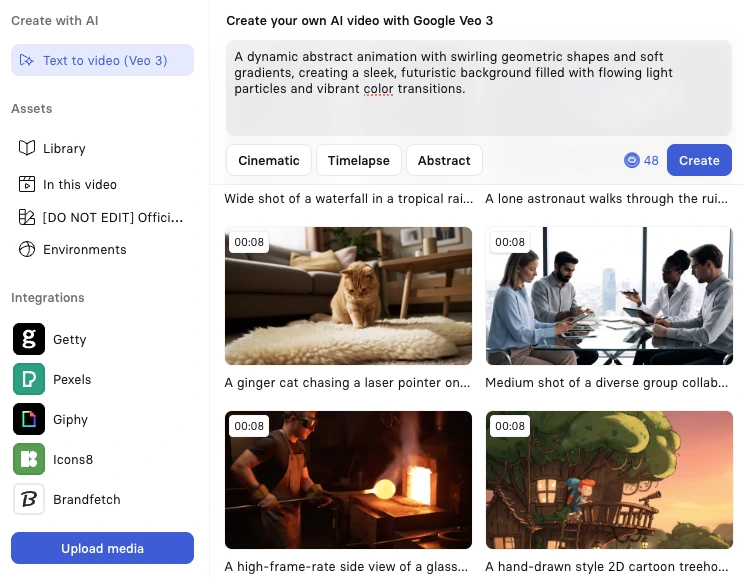

Add B-roll

B-roll helps break up long talking-head sections and keeps training videos visually engaging. In Synthesia, you can place clips between sections or layer them behind your avatar or voiceover to reinforce key points.

B-roll works well for showing real-world examples, people performing tasks, or visuals that support the narration. You can generate clips with AI video models like Sora or Veo, upload your own footage, or use Synthesia’s built-in stock library.

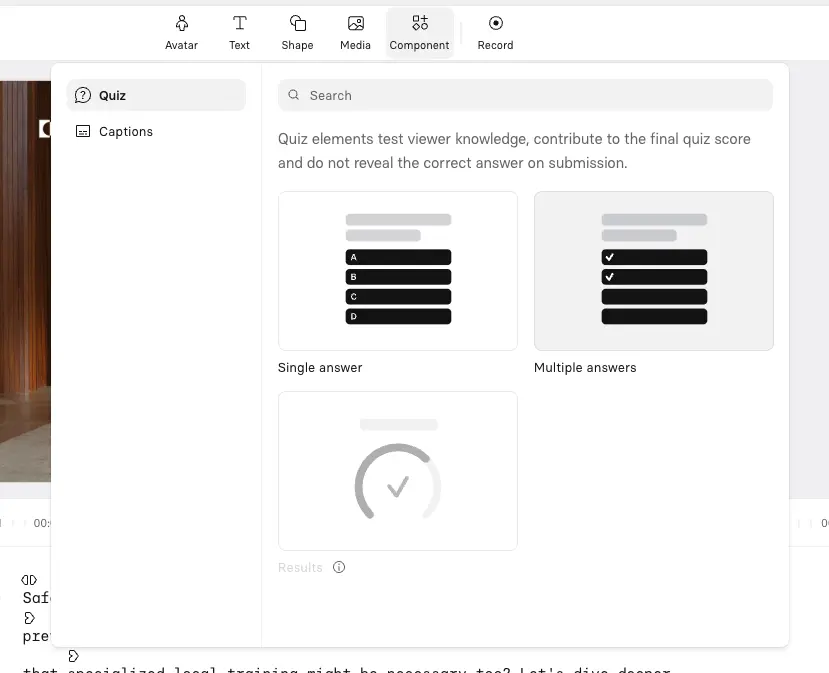

Add interactivity

Add interactive elements such as quizzes, branching scenarios, and clickable buttons to keep learners engaged. For example, you can add short knowledge checks after each section, or role-based branching that lets learners explore the scenarios relevant to their role.

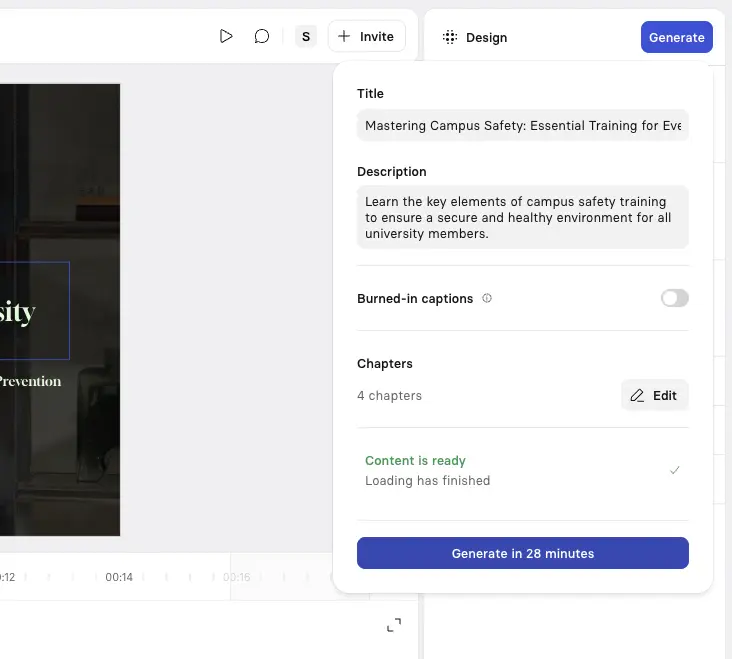

Step 6: Generate your video

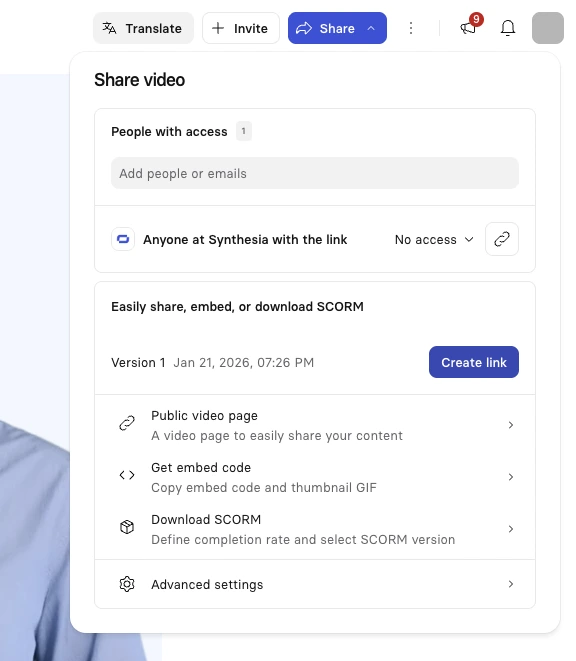

Click Generate in the top-right corner to create your video. You can then download your training video as an MP4, get a shareable link, embed your video on a webpage, or download a SCORM version of your video and upload it to your LMS.

Step 7: Publish and share your video

The final step is to publish and share your video. Most teams distribute training videos through an LMS, company intranet, or internal communications channels.

Synthesia lets you export your video as an MP4 file, or publish it within the platform, allowing you to embed the video wherever it’s needed.

Option 2: Starting from a training video template

Templates are the next most common way teams create training videos in Synthesia.

Instead of starting from existing training materials, you begin with a proven structure that defines the flow, purpose of each scene, and pacing of information. This makes templates especially useful for maintaining consistency across training programs such as onboarding or compliance.

Because the format is already designed around how people learn, templates also help boost engagement while keeping production fast and repeatable.

Step 1: Log in to Synthesia

Log in to Synthesia, or sign up for a free account.

Step 2: Choose a training video template

Synthesia offers a wide selection of training video templates to help you get started, from microlearning formats to scenario- or setting-based templates.

You can browse all available training video templates in your Synthesia dashboard by going to Templates and selecting the Training tag.

Training video examples (editable templates)

Below is a selection of my favorite training video templates covering the most common training topics.

Standard training

Clear, instructor-led learning videos that walk viewers through concepts, processes, or procedures in a structured, step-by-step way. Ideal for onboarding, compliance, and skills training, where the goal is to explain information clearly and consistently, with strong guidance and pacing.

Interactive training

Interactive videos are engaging, two-way learning experiences that include quizzes, branching scenarios, or clickable elements to keep learners actively involved. These videos help improve retention by letting employees make decisions, receive feedback, and learn by doing rather than just watching.

Onboarding

Introduction and orientation for new employees or users, helping them understand the organization’s mission, tools, and workflow. These videos set the tone for a warm, structured, and consistent onboarding experience.

Standard Operating Procedure (SOP)

Step-by-step visual guides that document how key processes should be performed in a consistent and compliant way. Video SOPs help teams follow the same workflows, reduce errors, and preserve operational knowledge across roles and locations.

Compliance training

Legal, safety, and regulatory requirements explained clearly to ensure employees understand critical policies. Often includes quizzes or interactive elements to confirm comprehension and adherence.

Cybersecurity training

Cybersecurity training videos protect your organization from digital threats such as phishing, password attacks, and data breaches. These videos teach security best practices, safe system usage, and company policies, helping reduce human error.

Product demos

Product demo videos show how your product works in real-world scenarios, walking viewers through features and use cases. Commonly used in marketing, onboarding, and customer support to highlight value quickly.

Technical skills training

Job-specific, hands-on instruction for using specialist software, tools, or equipment. Ideal for upskilling teams in fast-evolving technical roles.

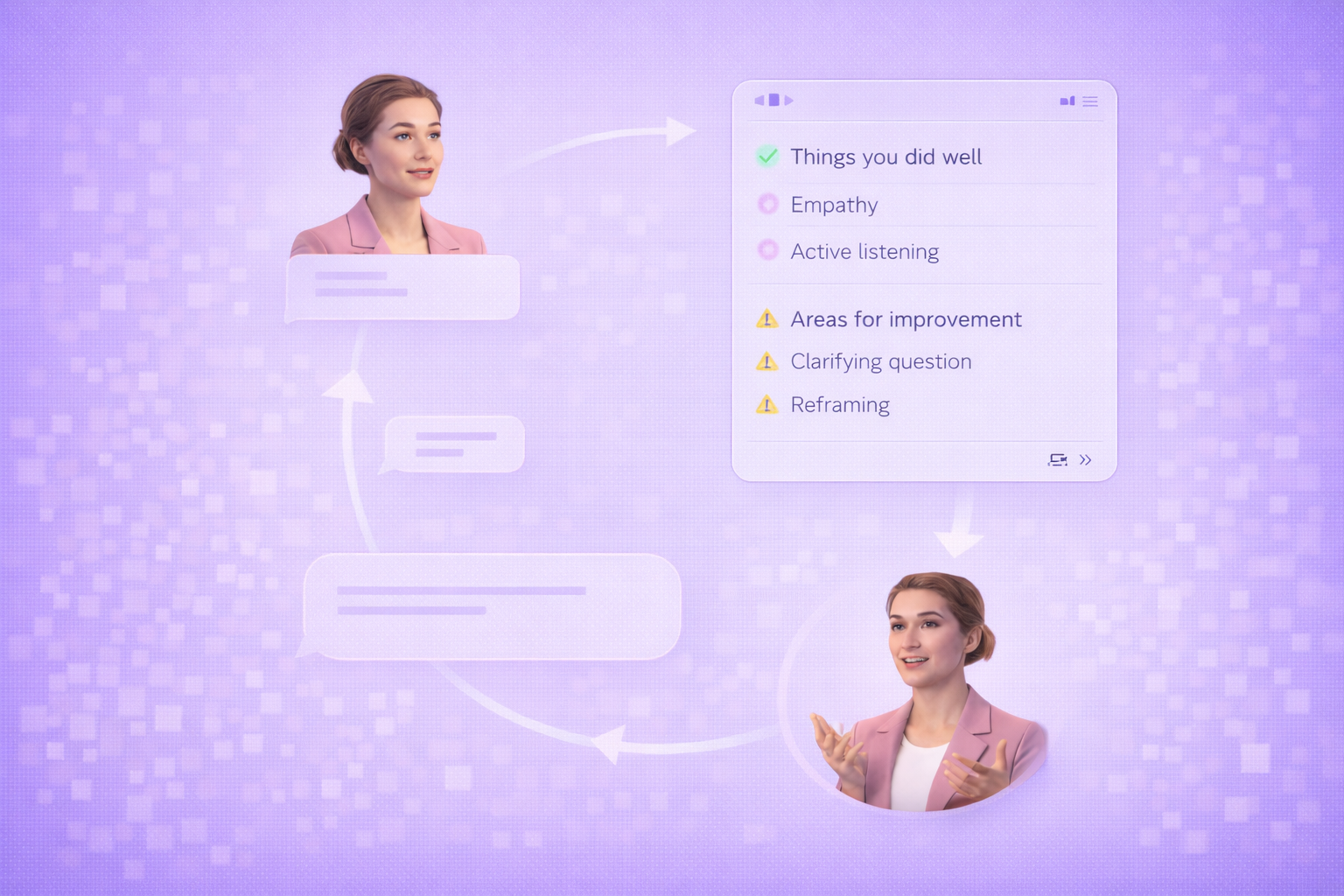

Customer service training

Customer service training videos provide best practices for client interactions, from communication skills to handling objections. Often includes realistic role-play scenarios to build confidence and consistency.

Health and safety

Workplace health and safety training videos, including emergency procedures and hazard prevention. Essential for industries where compliance and risk reduction are critical.

Leadership development

Leadership training videos for managers and executives focused on decision-making, communication, and strategy. Often features expert insights and real-world case studies.

Scenario-based training

Simulated real-world situations where learners can practice problem-solving in a safe environment. Especially effective for high-stakes or complex decision-making.

Why video is ideal for employee training

1. Higher engagement and easier information consumption

Video combines visual and auditory cues, making information easier to process and revisit. A survey from Synthesia shows that the vast majority of respondents perceive video as an effective format for learning and recall. In practice, learners often return to short video explanations when they need quick refreshers.

2. Cost savings

Employee training videos can significantly reduce the cost of in-person training by replacing repeated sessions with reusable content. For example, Microsoft reported a 95% reduction in L&D expenses after launching an internal video portal, reducing costs from $320 to $17 per employee. AI-generated training videos can amplify these savings further by removing the need for studios, actors, reshoots, and manual translation.
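As a quick check on the figures above, the percentage drop can be worked out directly from the two per-employee costs. The snippet below is a minimal sketch: the function name `percent_reduction` is purely illustrative, and the only inputs are the $320 and $17 values quoted in the text.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage drop from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Per-employee training costs quoted in the text (USD).
cost_before = 320
cost_after = 17

reduction = percent_reduction(cost_before, cost_after)
print(f"{reduction:.1f}% reduction")
```

Running it prints `94.7% reduction`, which rounds to the reported 95%.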

3. Simplified maintenance

Unlike traditional video production, AI video platforms make it easy to update existing content as processes change. Teams can modify scripts, visuals, or language without re-recording entire videos, helping training stay accurate and relevant over time.

When does it make sense to use a training video?

Training videos are particularly effective when clarity, consistency, and scale matter. Common scenarios include:

- Onboarding new employees or clients: Videos help standardize onboarding while allowing new joiners to learn at their own pace.

- Launching a new product or software: Short walkthrough videos can reduce the learning curve and support faster adoption than dense documentation.

- Ensuring consistency across teams: Videos help communicate standards—such as brand guidelines or operating procedures—clearly and consistently across regions.

- Scaling training efficiently: Recording once and reusing content avoids repeating the same sessions for every team or hire.

- Building a self-serve knowledge base: Training videos work well as on-demand resources for both basic onboarding and advanced feature usage.

- Explaining concepts quickly: If the same questions keep coming up, a short video can save time and reduce interruptions.

Tips for effective training videos

- Keep your videos short and focused: Shorter videos consistently outperform long ones. Many teams aim to keep individual videos under six minutes to maintain attention and completion rates.

- Define clear learning objectives: Each video should support a single outcome: what learners should know or be able to do after watching.

- Tailor to your audience: Variations by role, region, or experience level help increase relevance and engagement.

- Choose the appropriate format and style: Talking-head videos combined with scenarios or demonstrations often work well for training, as learners relate more easily to real-world contexts.

- Incorporate human elements: Using familiar faces, conversational language, or avatars can help maintain a sense of connection, especially in distributed teams.

- Vary visuals to sustain interest: Relying on static slides alone can reduce engagement. Mixing text, animation, video, and screen recordings helps maintain attention.

- Craft a concise introduction: Strong openings clarify what the video is about and why it matters within the first few seconds.

- Incorporate interactive elements: Quizzes, branching scenarios, and prompts encourage active participation and reinforce learning.

- Ensure multi-device accessibility: Mobile-friendly videos with clear visuals and readable text support learners who access training on the go.

- Regularly update content: Periodic reviews help ensure training content reflects current tools, processes, and priorities.

Most training video challenges aren’t about tools or formats. They come from how training is designed and maintained over time.

By treating videos as part of a system with clear goals, focused structure, and easy updates, teams can build training that scales.

About the author

Strategic Advisor

Kevin Alster

Kevin Alster is a Strategic Advisor at Synthesia, where he helps global enterprises apply generative AI to improve learning, communication, and organizational performance. His work focuses on translating emerging technology into practical business solutions that scale. He brings over a decade of experience in education, learning design, and media innovation, having developed enterprise programs for organizations such as General Assembly, The School of The New York Times, and Sotheby’s Institute of Art. Kevin combines creative thinking with structured problem-solving to help companies build the capabilities they need to adapt and grow.

What is a training video?

A training video is a video that educates the viewer on a topic and/or teaches a new skill. Training videos can be separated into two types: employee training videos and customer training videos.

For example, an employee training video can be used to introduce new employees to company policies and procedures, provide refresher training for experienced staff, or teach specialized skills, such as safety procedures or customer service techniques.

In this blog post, we're focusing on training videos for employees.

How long should a training video be?

The optimal length depends on the type of training video, and different studies point to different targets.

Research on online instructional videos has shown that engagement rates are highest for videos of six minutes or less.

Meanwhile, for software instructional videos geared towards microlearning, the ideal length is suggested to be 60 seconds.

However, the most critical factor is to ensure the training video provides necessary information concisely without overwhelming the learner, regardless of whether its duration is one minute or six.

What should be included in a training video?

What you include in a training video depends heavily on its topic and type, as well as your company policies and any relevant laws.

Legal requirements aside, the best tutorial videos mix educational content with engaging visuals and a touch of humor.

So include everything that is legally necessary and everything that applies to your company and its policies, then sprinkle in some personality and humor to make the video engaging to watch.